Previously: Models of (Dependent) Type Theory.

There is a deep connection between mathematics and programming. Computer programs deal with such mathematical objects as numbers, vectors, monoids, functors, algebras, and many more. We can implement such structures in most programming languages. For instance, here’s the definition of natural numbers in Haskell:

data Nat where

Z :: Nat -- zero

S :: Nat -> Nat -- successorThere is a problem, though, with encoding the laws that they are supposed to obey. These laws are expressed using equalities. But not all equalities are created equal. Take, for instance, this definition of addition:

plus :: Nat -> Nat -> Nat

plus Z n = n

plus (S n) m = S (plus n m)The first clause tells us that is equal to

. This is called definitional equality, since it’s just part of the definition. However, if we reverse the order of the addends and try to prove

, there is no obvious shortcut. We have to prove it the hard way! This second type of equality is called propositional.

In traditional mathematics equality is treated as a relation. Two things are either equal or not. In type theory, on the other hand, equality is a type. It’s a type of proofs of equality. When you postulate that two things are equal, you specify the type of proofs that you are willing to accept.

Equality is a dependent type, because it depends on the values that are being compared. For a pair of values of the type , the identity (or equality) type is denoted by

:

(In this notation, assumptions are listed above the horizontal line.)

You’ll also see written as

, or even

, if the type

is obvious from the context.

When two values are not equal, this type is uninhabited. Otherwise it’s inhabited by proofs, or witnesses, of equality. This is consistent with the propositions as types interpretation of type theory.

For the identity type to be useful, we have to have a way to populate it with values — proofs of equality. We do that by providing an introduction rule:

The constructor generates a witness to the obvious fact that, for every

,

. Here,

is the abbreviation of reflexivity.

At first sight it doesn’t seem like we have gained anything by turning equality into a type. Indeed, if were the only inhabitant of

, we’d just buy ourselves a lot of headaches for nothing. The trouble is that, if there is a type

, then there’s also a type

(type of equality of proofs of equality), and so on, ad infinitum. This used to be a bane of Martin Löf type theory, but it became a bounty for Homotopy Type Theory. So let’s imagine that the identity type may have non-trivial inhabitants. We’ll call these inhabitants paths. The trivial paths generated by

are degenerate do-nothing loops.

But first, let’s consider the identity elimination rule: the mapping out of the identity type. What data do we need to provide in order to define a function from a path to some type

? In full generality,

should be a dependent type family parameterized by the path

as well as its endpoints

and

:

Our goal is to define a -valued mapping

that works for any path in

:

The trick is to realize that there is only one family of constructors of the identity type, , so it’s enough to specify how

is supposed to act on it. We do this by providing a dependent function

(a dependent function is a function whose return type depends on the value of its argument):

This is very similar to constructing a mapping out of a Boolean by specifying its action on the two constructors, and

. Except that here we have a whole family of constructors

parameterized by

.

This is enough information to uniquely determine the action of on any path, provided it agrees with

along

:

This procedure for defining mappings out of identity types is called path induction.

Categorical Model

We’ll build a categorical interpretation of identity types making as few assumptions as possible. We’ll model as a fibration:

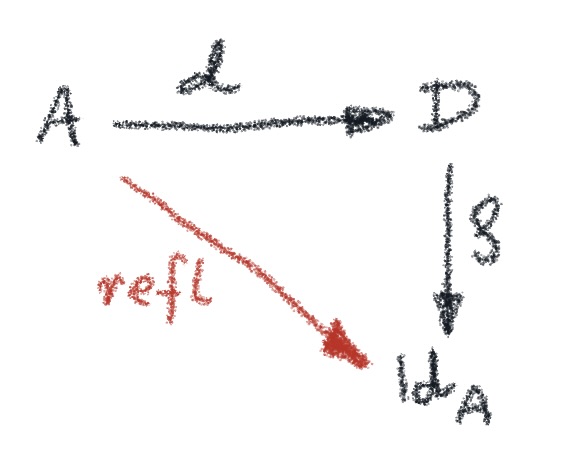

The introduction rule asserts the existence of the arrow:

For any given , this arrow selects a diagonal element of the identity type,

. When projected down to

using

, it lands on the diagonal of

. In a cartesian category, the diagonal is defined by the arrow

, so we have:

In other words, there is a factorization of the diagonal arrow.

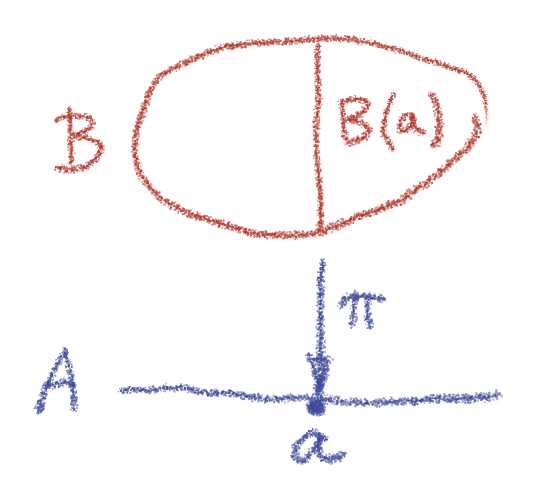

The elimination rule starts with defining a dependent type , which can be modeled as a fibration

:

We provide a dependent function , which is interpreted as an arrow

that maps

to an element

of

. So, when projected back to

using

, it produces the same element as

Given these assumptions, there exists a mapping:

which is interpreted as a section . The section condition is:

This mapping is unique if we assume that it agrees with when restricted to

:

Consequently, we can illustrate the path induction rule using a single commuting diagram:

If the outer square commutes then there is a unique diagonal arrow that makes the two triangles commute. The similarity to the lifting property of homotopy theory is not accidental, as we’ll see next.

Next: Modeling Identity Types.