Categories for Programmers. In the previous installment we discussed how to add logging to pure functions. See the Table of Contents.

Follow the Arrows

The Ancient Greek playwright Euripides once said: “Every man is like the company he is wont to keep.” We are defined by our relationships. Nowhere is this more true than in category theory. If we want to single out a particular object in a category, we can only do this by describing its pattern of relationships with other objects (and itself). These relationships are defined by morphisms.

There is a common construction in category theory called the universal construction for defining objects in terms of their relationships. One way of doing this is to pick a pattern, a particular shape constructed from objects and morphisms, and look for all its occurrences in the category. If it’s a common enough pattern, and the category is large, chances are you’ll have lots and lots of hits. The trick is to establish some kind of ranking among those hits, and pick what could be considered the best fit.

This process is reminiscent of the way we do web searches. A query is like a pattern. A very general query will give you large recall: lots of hits. Some may be relevant, others not. To eliminate irrelevant hits, you refine your query. That increases its precision. Finally, the search engine will rank the hits and, hopefully, the one result that you’re interested in will be at the top of the list.

Initial Object

The simplest shape is a single object. Obviously, there are as many instances of this shape as there are objects in a given category. That’s a lot to choose from. We need to establish some kind of ranking and try to find the object that tops this hierarchy. The only means at our disposal are morphisms. If you think of morphisms as arrows, then it’s possible that there is an overall net flow of arrows from one end of the category to another. This is true in ordered categories, for instance in partial orders. We could generalize that notion of object precedence by saying that object a is “more initial” than object b if there is an arrow (a morphism) going from a to b. We would then define the initial object as one that has arrows going to all other objects. Obviously there is no guarantee that such an object exists, and that’s okay. A bigger problem is that there may be too many such objects: The recall is good, but precision is lacking. The solution is to take a hint from ordered categories — they allow at most one arrow between any two objects: there is only one way of being less-than or equal-to another object. Which leads us to this definition of the initial object:

The initial object is the object that has one and only one morphism going to any object in the category.

However, even that doesn’t guarantee the uniqueness of the initial object (if one exists). But it guarantees the next best thing: uniqueness up to isomorphism. Isomorphisms are very important in category theory, so I’ll talk about them shortly. For now, let’s just agree that uniqueness up to isomorphism justifies the use of “the” in the definition of the initial object.

Here are some examples: The initial object in a partially ordered set (often called a poset) is its least element. Some posets don’t have an initial object — like the set of all integers, positive and negative, with less-than-or-equal relation for morphisms.

In the category of sets and functions, the initial object is the empty set. Remember, an empty set corresponds to the Haskell type Void (there is no corresponding type in C++) and the unique polymorphic function from Void to any other type is called absurd:

absurd :: Void -> a

It’s this family of morphisms that makes Void the initial object in the category of types.

Terminal Object

Let’s continue with the single-object pattern, but let’s change the way we rank the objects. We’ll say that object a is “more terminal” than object b if there is a morphism going from b to a (notice the reversal of direction). We’ll be looking for an object that’s more terminal than any other object in the category. Again, we will insist on uniqueness:

The terminal object is the object with one and only one morphism coming to it from any object in the category.

And again, the terminal object is unique, up to isomorphism, which I will show shortly. But first let’s look at some examples. In a poset, the terminal object, if it exists, is the biggest object. In the category of sets, the terminal object is a singleton. We’ve already talked about singletons — they correspond to the void type in C++ and the unit type () in Haskell. It’s a type that has only one value — implicit in C++ and explicit in Haskell, denoted by (). We’ve also established that there is one and only one pure function from any type to the unit type:

unit :: a -> () unit _ = ()

so all the conditions for the terminal object are satisfied.

Notice that in this example the uniqueness condition is crucial, because there are other sets (actually, all of them, except for the empty set) that have incoming morphisms from every set. For instance, there is a Boolean-valued function (a predicate) defined for every type:

yes :: a -> Bool yes _ = True

But Bool is not a terminal object. There is at least one more Bool-valued function from every type:

no :: a -> Bool no _ = False

Insisting on uniqueness gives us just the right precision to narrow down the definition of the terminal object to just one type.

Duality

You can’t help but to notice the symmetry between the way we defined the initial object and the terminal object. The only difference between the two was the direction of morphisms. It turns out that for any category C we can define the opposite category Cop just by reversing all the arrows. The opposite category automatically satisfies all the requirements of a category, as long as we simultaneously redefine composition. If original morphisms f::a->b and g::b->c composed to h::a->c with h=g∘f, then the reversed morphisms fop::b->a and gop::c->b will compose to hop::c->a with hop=fop∘gop. And reversing the identity arrows is a (pun alert!) no-op.

Duality is a very important property of categories because it doubles the productivity of every mathematician working in category theory. For every construction you come up with, there is its opposite; and for every theorem you prove, you get one for free. The constructions in the opposite category are often prefixed with “co”, so you have products and coproducts, monads and comonads, cones and cocones, limits and colimits, and so on. There are no cocomonads though, because reversing the arrows twice gets us back to the original state.

It follows then that a terminal object is the initial object in the opposite category.

Isomorphisms

As programmers, we are well aware that defining equality is a nontrivial task. What does it mean for two objects to be equal? Do they have to occupy the same location in memory (pointer equality)? Or is it enough that the values of all their components are equal? Are two complex numbers equal if one is expressed as the real and imaginary part, and the other as modulus and angle? You’d think that mathematicians would have figured out the meaning of equality, but they haven’t. They have the same problem of multiple competing definitions for equality. There is the propositional equality, intensional equality, extensional equality, and equality as a path in homotopy type theory. And then there are the weaker notions of isomorphism, and even weaker of equivalence.

The intuition is that isomorphic objects look the same — they have the same shape. It means that every part of one object corresponds to some part of another object in a one-to-one mapping. As far as our instruments can tell, the two objects are a perfect copy of each other. Mathematically it means that there is a mapping from object a to object b, and there is a mapping from object b back to object a, and they are the inverse of each other. In category theory we replace mappings with morphisms. An isomorphism is an invertible morphism; or a pair of morphisms, one being the inverse of the other.

We understand the inverse in terms of composition and identity: Morphism g is the inverse of morphism f if their composition is the identity morphism. These are actually two equations because there are two ways of composing two morphisms:

f . g = id g . f = id

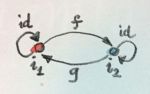

When I said that the initial (terminal) object was unique up to isomorphism, I meant that any two initial (terminal) objects are isomorphic. That’s actually easy to see. Let’s suppose that we have two initial objects i1 and i2. Since i1 is initial, there is a unique morphism f from i1 to i2. By the same token, since i2 is initial, there is a unique morphism g from i2 to i1. What’s the composition of these two morphisms?

The composition g∘f must be a morphism from i1 to i1. But i1 is initial so there can only be one morphism going from i1 to i1. Since we are in a category, we know that there is an identity morphism from i1 to i1, and since there is room for only one, that must be it. Therefore g∘f is equal to identity. Similarly, f∘g must be equal to identity, because there can be only one morphism from i2 back to i2. This proves that f and g must be the inverse of each other. Therefore any two initial objects are isomorphic.

Notice that in this proof we used the uniqueness of the morphism from the initial object to itself. Without that we couldn’t prove the “up to isomorphism” part. But why do we need the uniqueness of f and g? Because not only is the initial object unique up to isomorphism, it is unique up to unique isomorphism. In principle, there could be more than one isomorphism between two objects, but that’s not the case here. This “uniqueness up to unique isomorphism” is the important property of all universal constructions.

Products

The next universal construction is that of a product. We know what a cartesian product of two sets is: it’s a set of pairs. But what’s the pattern that connects the product set with its constituent sets? If we can figure that out, we’ll be able to generalize it to other categories.

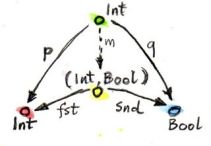

All we can say is that there are two functions, the projections, from the product to each of the constituents. In Haskell, these two functions are called fst and snd and they pick, respectively, the first and the second component of a pair:

fst :: (a, b) -> a fst (x, y) = x

snd :: (a, b) -> b snd (x, y) = y

Here, the functions are defined by pattern matching their arguments: the pattern that matches any pair is (x, y), and it extracts its components into variables x and y.

These definitions can be simplified even further with the use of wildcards:

fst (x, _) = x snd (_, y) = y

In C++, we would use template functions, for instance:

template<class A, class B>

A fst(pair<A, B> const & p) {

return p.first;

}

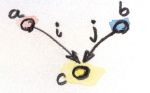

Equipped with this seemingly very limited knowledge, let’s try to define a pattern of objects and morphisms in the category of sets that will lead us to the construction of a product of two sets, a and b. This pattern consists of an object c and two morphisms p and q connecting it to a and b, respectively:

p :: c -> a q :: c -> b

All cs that fit this pattern will be considered candidates for the product. There may be lots of them.

For instance, let’s pick, as our constituents, two Haskell types, Int and Bool, and get a sampling of candidates for their product.

Here’s one: Int. Can Int be considered a candidate for the product of Int and Bool? Yes, it can — and here are its projections:

p :: Int -> Int p x = x q :: Int -> Bool q _ = True

That’s pretty lame, but it matches the criteria.

Here’s another one: (Int, Int, Bool). It’s a tuple of three elements, or a triple. Here are two morphisms that make it a legitimate candidate (we are using pattern matching on triples):

p :: (Int, Int, Bool) -> Int p (x, _, _) = x q :: (Int, Int, Bool) -> Bool q (_, _, b) = b

You may have noticed that while our first candidate was too small — it only covered the Int dimension of the product; the second was too big — it spuriously duplicated the Int dimension.

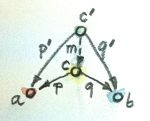

But we haven’t explored yet the other part of the universal construction: the ranking. We want to be able to compare two instances of our pattern. We want to compare one candidate object c and its two projections p and q with another candidate object c’ and its two projections p’ and q’. We would like to say that c is “better” than c’ if there is a morphism m from c’ to c — but that’s too weak. We also want its projections to be “better,” or “more universal,” than the projections of c’. What it means is that the projections p’ and q’ can be reconstructed from p and q using m:

p’ = p . m q’ = q . m

Another way of looking at these equation is that m factorizes p’ and q’. Just pretend that these equations are in natural numbers, and the dot is multiplication: m is a common factor shared by p’ and q’.

Just to build some intuitions, let me show you that the pair (Int, Bool) with the two canonical projections, fst and snd is indeed better than the two candidates I presented before.

The mapping m for the first candidate is:

m :: Int -> (Int, Bool) m x = (x, True)

Indeed, the two projections, p and q can be reconstructed as:

p x = fst (m x) = x q x = snd (m x) = True

The m for the second example is similarly uniquely determined:

m (x, _, b) = (x, b)

We were able to show that (Int, Bool) is better than either of the two candidates. Let’s see why the opposite is not true. Could we find some m' that would help us reconstruct fst and snd from p and q?

fst = p . m’ snd = q . m’

In our first example, q always returned True and we know that there are pairs whose second component is False. We can’t reconstruct snd from q.

The second example is different: we retain enough information after running either p or q, but there is more than one way to factorize fst and snd. Because both p and q ignore the second component of the triple, our m’ can put anything in it. We can have:

m’ (x, b) = (x, x, b)

or

m’ (x, b) = (x, 42, b)

and so on.

Putting it all together, given any type c with two projections p and q, there is a unique m from c to the cartesian product (a, b) that factorizes them. In fact, it just combines p and q into a pair.

m :: c -> (a, b) m x = (p x, q x)

That makes the cartesian product (a, b) our best match, which means that this universal construction works in the category of sets. It picks the product of any two sets.

Now let’s forget about sets and define a product of two objects in any category using the same universal construction. Such product doesn’t always exist, but when it does, it is unique up to a unique isomorphism.

A product of two objects a and b is the object c equipped with two projections such that for any other object c’ equipped with two projections there is a unique morphism m from c’ to c that factorizes those projections.

A (higher order) function that produces the factorizing function m from two candidates is sometimes called the factorizer. In our case, it would be the function:

factorizer :: (c -> a) -> (c -> b) -> (c -> (a, b)) factorizer p q = \x -> (p x, q x)

Coproduct

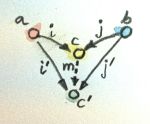

Like every construction in category theory, the product has a dual, which is called the coproduct. When we reverse the arrows in the product pattern, we end up with an object c equipped with two injections, i and j: morphisms from a and b to c.

i :: a -> c j :: b -> c

The ranking is also inverted: object c is “better” than object c’ that is equipped with the injections i’ and j’ if there is a morphism m from c to c’ that factorizes the injections:

i' = m . i j' = m . j

The “best” such object, one with a unique morphism connecting it to any other pattern, is called a coproduct and, if it exists, is unique up to unique isomorphism.

A coproduct of two objects a and b is the object c equipped with two injections such that for any other object c’ equipped with two injections there is a unique morphism m from c to c’ that factorizes those injections.

In the category of sets, the coproduct is the disjoint union of two sets. An element of the disjoint union of a and b is either an element of a or an element of b. If the two sets overlap, the disjoint union contains two copies of the common part. You can think of an element of a disjoint union as being tagged with an identifier that specifies its origin.

For a programmer, it’s easier to understand a coproduct in terms of types: it’s a tagged union of two types. C++ supports unions, but they are not tagged. It means that in your program you have to somehow keep track which member of the union is valid. To create a tagged union, you have to define a tag — an enumeration — and combine it with the union. For instance, a tagged union of an int and a char const * could be implemented as:

struct Contact {

enum { isPhone, isEmail } tag;

union { int phoneNum; char const * emailAddr; };

};

The two injections can either be implemented as constructors or as functions. For instance, here’s the first injection as a function PhoneNum:

Contact PhoneNum(int n) {

Contact c;

c.tag = isPhone;

c.phoneNum = n;

return c;

}

It injects an integer into Contact.

A tagged union is also called a variant, and there is a very general implementation of a variant in the boost library, boost::variant.

In Haskell, you can combine any data types into a tagged union by separating data constructors with a vertical bar. The Contact example translates into the declaration:

data Contact = PhoneNum Int | EmailAddr String

Here, PhoneNum and EmailAddr serve both as constructors (injections), and as tags for pattern matching (more about this later). For instance, this is how you would construct a contact using a phone number:

helpdesk :: Contact; helpdesk = PhoneNum 2222222

Unlike the canonical implementation of the product that is built into Haskell as the primitive pair, the canonical implementation of the coproduct is a data type called Either, which is defined in the standard Prelude as:

Either a b = Left a | Right b

It is parameterized by two types, a and b and has two constructors: Left that takes a value of type a, and Right that takes a value of type b.

Just as we’ve defined the factorizer for a product, we can define one for the coproduct. Given a candidate type c and two candidate injections i and j, the factorizer for Either produces the factoring function:

factorizer :: (a -> c) -> (b -> c) -> Either a b -> c factorizer i j (Left a) = i a factorizer i j (Right b) = j b

Asymmetry

We’ve seen two set of dual definitions: The definition of a terminal object can be obtained from the definition of the initial object by reversing the direction of arrows; in a similar way, the definition of the coproduct can be obtained from that of the product. Yet in the category of sets the initial object is very different from the final object, and coproduct is very different from product. We’ll see later that product behaves like multiplication, with the terminal object playing the role of one; whereas coproduct behaves more like the sum, with the initial object playing the role of zero. In particular, for finite sets, the size of the product is the product of the sizes of individual sets, and the size of the coproduct is the sum of the sizes.

This shows that the category of sets is not symmetric with respect to the inversion of arrows.

Notice that while the empty set has a unique morphism to any set (the absurd function), it has no morphisms coming back. The singleton set has a unique morphism coming to it from any set, but it also has outgoing morphisms to every set (except for the empty one). As we’ve seen before, these outgoing morphisms from the terminal object play a very important role of picking elements of other sets (the empty set has no elements, so there’s nothing to pick).

It’s the relationship of the singleton set to the product that sets it apart from the coproduct. Consider using the singleton set, represented by the unit type (), as yet another — vastly inferior — candidate for the product pattern. Equip it with two projections p and q: functions from the singleton to each of the constituent sets. Each selects a concrete element from either set. Because the product is universal, there is also a (unique) morphism m from our candidate, the singleton, to the product. This morphism selects an element from the product set — it selects a concrete pair. It also factorizes the two projections:

p = fst . m q = snd . m

When acting on the singleton value (), the only element of the singleton set, these two equations become:

p () = fst (m ()) q () = snd (m ())

Since m () is the element of the product picked by m, these equations tell us that the element picked by p from the first set, p (), is the first component of the pair picked by m. Similarly, q () is equal to the second component. This is in total agreement with our understanding that elements of the product are pairs of elements from the constituent sets.

There is no such simple interpretation of the coproduct. We could try the singleton set as a candidate for a coproduct, in an attempt to extract the elements from it, but there we would have two injections going into it rather than two projections coming out of it. They’d tell us nothing about their sources (in fact, we’ve seen that they ignore the input parameter). Neither would the unique morphism from the coproduct to our singleton. The category of sets just looks very different when seen from the direction of the initial object than it does when seen from the terminal end.

This is not an intrinsic property of sets, it’s a property of functions, which we use as morphisms in Set. Functions are, in general, asymmetric. Let me explain.

A function must be defined for every element of its domain set (in programming, we call it a total function), but it doesn’t have to cover the whole codomain. We’ve seen some extreme cases of it: functions from a singleton set — functions that select just a single element in the codomain. (Actually, functions from an empty set are the real extremes.) When the size of the domain is much smaller than the size of the codomain, we often think of such functions as embedding the domain in the codomain. For instance, we can think of a function from a singleton set as embedding its single element in the codomain. I call them embedding functions, but mathematicians prefer to give a name to the opposite: functions that tightly fill their codomains are called surjective or onto.

The other source of asymmetry is that functions are allowed to map many elements of the domain set into one element of the codomain. They can collapse them. The extreme case are functions that map whole sets into a singleton. You’ve seen the polymorphic unit function that does just that. The collapsing can only be compounded by composition. A composition of two collapsing functions is even more collapsing than the individual functions. Mathematicians have a name for non-collapsing functions: they call them injective or one-to-one

Of course there are some functions that are neither embedding nor collapsing. They are called bijections and they are truly symmetric, because they are invertible. In the category of sets, an isomorphism is the same as a bijection.

Challenges

- Show that the terminal object is unique up to unique isomorphism.

- What is a product of two objects in a poset? Hint: Use the universal construction.

- What is a coproduct of two objects in a poset?

- Implement the equivalent of Haskell

Eitheras a generic type in your favorite language (other than Haskell). - Show that

Eitheris a “better” coproduct thanintequipped with two injections:int i(int n) { return n; } int j(bool b) { return b? 0: 1; }Hint: Define a function

int m(Either const & e);

that factorizes

iandj. - Continuing the previous problem: How would you argue that

intwith the two injectionsiandjcannot be “better” thanEither? - Still continuing: What about these injections?

int i(int n) { if (n < 0) return n; return n + 2; } int j(bool b) { return b? 0: 1; } - Come up with an inferior candidate for a coproduct of

intandboolthat cannot be better thanEitherbecause it allows multiple acceptable morphisms from it toEither.

Next: Simple Algebraic Data Types.

Bibliography

- The Catsters, Products and Coproducts video.

Acknowledments

I’m grateful to Gershom Bazerman for reviewing this post before publication and for stimulating discussions.

Follow @BartoszMilewski

January 11, 2015 at 7:11 pm

Hello Bartosz,

firstly, thank you for this series! I find it very well written and interesting.

Up to now, everything was clear for me. However, in this installment I got a little confused about opposite categories. From what I gather for every category there is opposite category and we can define one by reversing arrows. Reversing arrows twice gets us back to initial category.

Here is the confusion:

For category of sets, if we reverse morphism Empty -> A, we get A -> Empty, which is not a function. How can we get back from category opposite to Set back to Set if A -> Empty does not exist and therefore there is no arrow to reverse?

Thank you for all your time.

January 11, 2015 at 11:18 pm

Hi Adam,

When you reverse arrows in Set, you don’t get Set back. You get a different category called Setop. What was called Empty in Set can no longer be interpreted as an empty set.

Think of the Set category as a schematic drawn with arrows and dots. We derived this schematic by looking at actual sets and functions between them, but then we forgot about sets and functions and just stare at dots and arrows. When we flip all the arrows, we get a different schematic, which still describes a category, but this new category is no longer tied to sets and functions.

January 12, 2015 at 12:51 pm

That is clear. Thank you.

January 15, 2015 at 8:48 am

Really well written post. I enjoy this series a lot and hope you will show even more interesting applications of category theory.

March 4, 2015 at 10:19 am

What does it mean “unique morphism from a to b”. Does it mean that there is only single arrow from A to B?

March 4, 2015 at 1:29 pm

@Dima: Yes.

March 13, 2015 at 1:08 pm

[…] those that involved relationships between objects. We used universal constructions to define a product type and a coproduct types. We can use the same trick to define a function type. We will need a pattern that involves three […]

March 31, 2015 at 3:56 am

from the “products” section on this “chapter” gets rather confusing, and the practical purpose of the exposed concepts is completely unclear (at least to me). It reads more like a list of definitions than anything that ties into anything we’ve seen until these sections.

I’d really love if you could revise these sections and give more context and insight into why these concepts are relevant and useful. also I think the concepts are really simple and much of the current exposition could be trimmed and replaced by some useful practical insight like I’ve already said.

May 25, 2015 at 4:25 am

Grammar/typo nit… “ways of composing two morphism:” seems like morphism should be plural. These articles are of high quality. Thank you for them.

June 4, 2015 at 3:51 pm

@elburabure, just wanted to give you my answer about the practical purposes. As for me, I see here two main advantages for now:

1). Paradigm shift from object-based thinking to the relation-based one (yeap, it is hard to do – but if you want to feel that one fully – you will, you just need more time to understand it: just keep doing work on it).

2). Ability to give the mathematical model for code.

About second one you could read in the chapter “Types and Functions” subsection “Why Do We Need a Mathematical Model?”. And also in the next chapter there is interesting insight about the types algebra.

About the first – all of those definitions are about relations without any concern of the internal structure of the objects and that is why the shift is happening. It is like upgrading the old-school context of the Sets to the ‘new’ Categorical framework.

Interesting to notice that if any two objects X and Y related by an isomorphism F: X \to Y then they have a very important property: the class of morphisms from X (resp. into X) is in one-to-one correspondence with the class of morphisms from Y (resp. into Y). It means that we can’t distinguish between them by interaction and that is why they are actually ‘the same’ in the category theory.

If we ask ourselves – can we collapse the structure (objects collection) into an object that captures the collective behavior? Then the most trivial answer will be product and sum constructions.

Product of the two objects A and B is the new object C which represents the incoming relations interface for interacting with them. It captures incoming ‘social life’. Sum is the same but for the outgoing relations (duality principle here is in the action).

So that’s all is about doing system parts composition and architecture. That is what the programming is in the global sense.

Hope that my answer will help you somehow! Please ask more if you will need help.

July 6, 2015 at 4:52 pm

Could you please add a few more examples of usual objects defined with category theory? In particular it would be interesting to see how natural numbers are implemented there (I mean, it is one of the most used things, and I think that it could give a lot of intuition for how to work with categories).

July 9, 2015 at 9:44 am

First question: What does “it is unique up to unique isomorphism” ever mean? I read the text as there can be any number of isomorphic (initial) objects. Well, it seems that you are saying that we can think of another isomorphism with another equivalent node but once isomorphism is defined, we can have one counterpart at most?

@Constantine Kharlamov I think that they have posted two examples with integer numbers: the multiplication and addition groupls. Both are monoids, which is expected since you have only one object (one type).

July 9, 2015 at 9:50 am

It is also ridiculous to hear that higher cardinality sets enclosed between sets of cardinality 0 and 1.

July 9, 2015 at 10:05 am

@Валентин: There can, in principle, be multiple initial (or terminal) objects, but they are all isomorphic. Pick two such objects and an isomorphism f between them. Any other isomorphism between these two object must be equal to f (it’s a fancy way of saying that there can be only one such f).

You might be wondering what equality means when you speak of morphisms. That’s a good question and it leads to some interesting theories such as enriched categories, n-categories, HOTT, and univalence. But that’s way beyond the scope of this blog.

July 19, 2015 at 8:40 pm

Here is another question. Your last section, where you say that single domain unit () is mapped to all codomain types does not make sense at all and that is the essense of surjective functions: At first, unit () is a terminal in your category (theory) and I wonder how is it mapped anywhere. Secondly, function can have only one value and you cannot map single point to multiple targets. Third, all functions are surjective (total) and surjectivity stands for for covering the whole domain (defined for every argument x and that is why they are total), I have checked that in WP regarding this. Functions that tightly fill their codomain are called “injective”. So, you have put everything upside down, as it seems, and I could not understand what you are talking about embedding for this reason.

July 20, 2015 at 1:30 pm

@Valentin: I think you have confused some definitions. It’s true that a function must assign a single value to each argument.

The functions we are dealing with here are “total,” which means that they are defined for every argument in their domain. This is normal in mathematics, but in programming we sometimes have functions that are undefined for some arguments (for instance, they throw exceptions).

Functions don’t have to be injective or surjective. So you can define functions from a single-element set, corresponding to (), to any non-empty set. Such a function just picks one single value from its co-domain. It is not surjective, but it is injective.

Being a terminal object is a statement about incoming morphisms. It doesn’t impose any restrictions on outgoing morphisms.

Injective, or “one-to-one,” means that different arguments are mapped to different values. It “injects” or “embeds” its domain into the co-domain.

Surjective, or “onto,” means that the whole co-domain is covered.

July 22, 2015 at 4:05 am

I am rereading your excellent posts. I suspect it will take me many more tries until it clicks. This time I am trying to really understand the detail instead of simply trying to catch some buzzwords or rough ideas. I apologize if my questions below are pedantic.

In the Products section.

The third diagram, the one with

m :: c'->c(call it diagram 3)In diagram 3 the morphisms p’ and q’ represent the worse (not better) candidates, I believe. That is, p and q represent the better (more universal) morphisms. As the text progresses you state:

I am either confused, or I think the renaming from Diagram 3 to the equations threw me.

Given the labeling in diagram 3, in the equations, should p and q not be p’ and q’ respectively? Likewise, are fst and send, in the equations, replacements for p and q respectively, in the diagram?

If this is not the case then I must really not understand. If it is the case then I think the pedagogy would be easier to follow if the labels in the diagram matched the variables in the equations.

My second question also relates to the Products section. You show that c is better than c’ for two examples of c’ (and its pair of projections p’ and q’). You the show that c’ is not better than c for the same two examples. Then, you seem to close by stating c (with p and q) is the best (most universal). This seems to be a proof by two examples. I suspect you intentionally did this because the proof would be too involved at this point, but then again it seems really fundamental to continuing. How would one go about proving that c really is the best (most universal)? I think understanding how you jump to “that makes the Cartesian product (a,b) our best match” would really help me clarify some of the connections that you seem to grasp so firmly. Of course, I may just be missing something as well, but I can’t put my finger on what it might be.

Thank you.

July 22, 2015 at 11:14 am

@jjj: I see why it may be confusing. I’m switching from the general formula back to example 1, in which p and q were defined as:

They would correspond to p’ and q’ in the general diagram, while fst and snd correspond to p and q.

I’ll edit the text to make this jump more explicit.

As for the proof: I gave the examples to show the general pattern. It’s interesting to see what choices are available and why they are not universal.

But the formula:

is general. It shows that for any c, p, and q you can build an m. I am not proving that it’s unique. Maybe this should be an exercise?

July 22, 2015 at 5:43 pm

Bartosz, I appreciate your thoughtful replies to my questions. I very much hope that my questions or comments are not an annoyance. I tell myself that others might have similar questions and the back and forth might be helpful for your text. I hope some part of that is true.

I reviewed your last answer and spent some hours thinking. Regarding the proof that c,p,q is a universal construction, I believe it would be better as a show-and-tell than as an exercise. At this point in the reading, I really cant see how to proceed.

The first question I asked myself is whether a universal construction is any of the “matches” or is the “best match” as defined by some ranking. I suspect those two are the same since the ranking could be vacuous and the match could be object. Then, every object in the category would be an example of a universal construction. Is this correct?

This led me to the realization (though I think it is stated throughout the text, it still had to occur to me) that within a category there are potentially many examples of universal constructions. They are basically objects and morphisms where each plays a given role and follows certain rules. The role idea seems relevant if one considers a set of objects and morphisms that form a universal construction. I suppose it is possible that that same set of objects and morphisms could also represent the same universal construction where the objects and morphisms are permuted with respect to their roles in the universal construction. I don’t know if this is possible, though my gut tells me it is, and that it really means I did not pick the right universal construction that would subsume the permutations as part of its definition.

Returning to the proof, I began considering the nature of candidates for the object c. We call the best one (a,b), but that seems to leak information about the contents of the object. I realize it is just a notational convenience, but it still seemed important to me to consider the contents of c as opaque. In that case, I know that c must contain one element for each pair (a,b). The elements do not need to be anything special (in fact, I am not supposed to think about what they might be). Of course, I once again considered the contents of c. I wonder, then, how would I define c using only the properties of the morphisms, p and q. I considered stating that p or q had to be injective or surjective or something like that. But p and q are independent morphisms – they do not produce a value based on the value produced by the other.

// Let a1,a2 be elements of a, and b1,b2 elements of b, etc.

Say, c contains { (a1,b1), (a2,b2) }. Please ignore the fact that I am assuming a set here, I am not skilled enough to express myself otherwise. My initial thought was that this c could not be a candidate for product since p and q are independent and I want to exclude the pairs { (a1,b2), (a2, b1) }. Then, it again occurred to me that c just contains “things”, not pairs.

So, (letting c1=(a1,b1) to ignore the visual leakage)

p :: c->a

p x = if (x==c1) then a1 else a2

q :: c->b

q x = if (x==c1) then b1 else b2

these two functions (excuse the mix of C/Haskell) would indeed only result in the two pairs in c. Of course, I am not saying it is the best match, just that this c,p,q fits the rough pattern.

This got me thinking about your example. Can Int be considered a candidate for the product of Int and Bool? You then gave two projections. I understand, they are not the only ones and it was just an example. However, what if I give two different projections.

p :: Int -> Int

p x = x/2 // Integer division

q :: Int->Bool

q x = (x mod 2 == 0) then True else False

It seems that if c contains enough elements (|a|*|b|) then I can establish a mapping that will work. I think, however, that these mappings and the object c are isomorphic to the mappings you have called (fst and snd) along with object (a,b).

This then leaves me in a state where I still don’t know how to pursue the proof. I keep appealing to the actual definitions of the morphisms and, worse, the contents of the objects. My universal construction, I would think, should be built without such dependencies. But, I don’t know how to constrain c or p or q. This is typical for me with category theory. The proof is a game, there are some rules (moves) and there is a goal. I can’t quite put my finger on the full set of moves that I am allowed to make, and, in this case, I am not really sure how to phrase the goal. I feel that I am not allowed to look into the objects, but I don’t see how to prove it otherwise.

I hope I am not too presumptuous by asking such a long question. I truly appreciate your time and I will understand completely if my question would take too much it.

July 22, 2015 at 8:19 pm

@jjj: I added one more picture that might help avoid the confusion.

July 23, 2015 at 12:23 pm

@jjj: One of the reasons I’m posting these chapters in a blog is to solicit feedback. One kind of feedback serves the purpose of catching errors in my presentation. The second kind is to find the best, most accessible, explanations. If something is not clear, I will try to improve it. Your feedback is valuable to me.

I keep switching between the general view and examples. When I define universal constructions, I have to use the most general categorical language. No peeking at object, just follow the arrows.

There are many different universal construction but they share the same general description: you define a particular pattern of objects and morphisms, you establish a ranking between matches, and then you pick the best match (if it exists) that can be uniquely mapped to any other match (or that can be uniquely mapped from any other match).

Product and co-product are examples of such universal constructions. They are also very general: no peeking at objects.

And then you have examples of these constructions in concrete categories, in particular in Set. In Set objects are sets and morphisms are functions and you are free to peek inside sets. You translate the particular universal constriction to Set and you can see what it does to elements. When you write Haskell code, you are essentially in Set.

Notice that there are no condition on c, p, or q, except that p must map c to a and q must map c to b. You can pick absolutely anything.

When you’re talking about the proof, what are you trying to prove?

July 23, 2015 at 5:43 pm

Its funny that you ended your last post with the question “what are you trying to prove”. You also seem to have recognized where I might be getting confused by separating the abstract proof from the concrete proof. I think this distinction was, indeed, a sticking point. I did not know what I was trying to prove because I did not recognize I was actually trying to prove two different things.

Both proofs are at https://en.wikiversity.org/wiki/Introduction_to_Category_Theory/Products_and_Coproducts

The first proof is “the product of any two objects is unique up to isomorphism”.

Reading the proof on that site I find that it makes sense though I still don’t see how the uniqueness of g in the equations

f1 . 1x = f1 = pi1 . g = f1 . h . g

f2 . 1x = f2 = pi2 . g = f2 . h . g

Lead to the fact that 1x = h . g

We cannot cancel f1 as we would with multiplication. So, is it one or both of the equations above along with the uniqueness of g that leads to 1x = h . g and how do they lead to it. Perhaps, I just need to stare at it a bit longer.

The second proof I was after is “the Cartesian product of non-empty sets is a product in the category of sets”. This is the concrete proof. I should likely go through the first 300 pages of Lawvere’s Conceptual Mathematics to get a real gut feeling for this with respect to the category of sets and the category of sets with an endofunctor, etc. He moves at a very slow pace with many examples.

I find it very interesting to read your posts as they walk a line between Lawvere and Mac Lane, plus Lawvere never makes it to natural transformations. Your explanation using the idea of ranking was very helpful. I had not seen that approach before. I also find your constant return to computer science and type theory extremely useful. That is my background and most math texts and even cat theory comp sci papers do a poor job of drawing connections. The choice of C++ is a bit tough. I have not used it for 15 years. Lately everything is C# and JavaScript.

I am reading about category theory not just as a curiosity, but also because I spent a number of years developing a syntax-free programming environment based on the composition of declarative primitives which are stored as configuration in a database. My business users can visualize the primitives using a simple GUI and they can and do modify very complex system behavior without requiring a recompile or a redeploy or even a chat session with a developer. I began noticing that certain properties needed to be retained across composition. I, unfortunately, could not formalize my ideas with my math background. My primitives maintain history which leads me to believe I need to use comonads to do the modeling. I suspect a long journey ahead before I can formalize the system.

Best regards.

July 23, 2015 at 6:58 pm

I added a link to the excellent Catster video about products and coproducts.

August 26, 2015 at 12:21 am

Kinda got confused with the “shape” metaphor there.

From what I understood, a shape is a pattern that the arrows form. All right, pick one (oh, and is what I pick immaterial?) and find the best in the quantitative sort way.

And then, there was this – “The simplest shape is a single object.”. With my understanding of “shape”, this didn’t make sense at all! In this context, does it mean the identity morphism? Or all the arrows that go away from this single object? Or is my complete understanding wrong?

August 26, 2015 at 12:48 am

Here are the exact words: “pick a pattern, a particular shape constructed from objects and morphisms.” So it’s not just arrows. It’s objects connected by arrows. The one-object pattern is a degenerate case where the number of objects is one and the number of arrows is zero. Zero is as good a number as any.

September 6, 2015 at 6:33 am

I hope you don’t mind if I spoil two of the challenges, but I’d like to know if I have them correct and couldn’t find the answers elsewhere.

Assume we have a poset with the relation less-than-or-equal-to. Then product of two objects in the poset is the lesser of the two, while their coproduct is the greater of the two. (Thus posets are symmetrical in a way that functions are not.)

I had to read this chapter about five times in order to understand it, so if I got this right, I’m going to be thrilled. Thanks for a great series!

September 6, 2015 at 10:54 pm

@brianberns: Almost there, except that you’re assuming that any two objects can be compared. That’s true in total order, but not in partial order.

Think of a company with the relation “X is the boss of Y,” with the assumption that “the boss of my boss is also my boss,” and “everybody is the boss of themselves.” There may be many people who are not in this relationship. They are either teammates, or work in different departments.

BTW, who’s the initial object in this category?

September 7, 2015 at 4:41 pm

Ah, that makes sense. So the product in a poset is actually the “lowest common boss” of the two objects. Thank you.

I think the initial object would be the CEO of the company (if there is one), yes?

September 7, 2015 at 6:11 pm

Yes!

December 2, 2015 at 9:32 am

Hi Bartosz,

Thanks so much about this! There seem to be alternative definitions of products using (endo)bifunctors and natural transformations. I am using Lambek’s paper http://link.springer.com/chapter/10.1007%2FBFb0079385

“A cartesian category is a quintuple

C: A category

T is an object of C

/: C x C -> C is a bifunctor, and alpha, beta are

natural isomorphisms as follows:

alpha(A): 1 -> [A,T] ,

beta(A,B,C): [C,A] x [C,B] -> [C,A /\ B] .

Here 1 is “the” one-element set, and [A,B] is short for

HomC(A,B).

Thus T is “the” terminal object of C

and /\ is the usual categorical product.

I am not sure I grasp this definition. Are you going to be explaining such constructions later?

December 4, 2015 at 8:12 am

Typo:

/: C x C -> C is a bifunctor

December 6, 2015 at 10:39 am

Hello Bartosz,

There’s something unclear to me about the product. I seems to me that both

(Int, Bool) and (Bool, Int) are equally good candidates for the product of Int and Bool, so they should be equal up to unique isomorphism. But I can find the following two isomorphisms (Int, Bool) -> (Bool, Int) :

iso1 (x,y) = (y, x)

iso2 (x,y) = (not y, x)

What am I missing?

Thank you very much for this series by the way!

December 6, 2015 at 12:45 pm

Your iso1 is the more general isomorphism. Its polymorphic version is called

swap:It happens to be its own inverse (apply

swaptwice and you get back to your starting point).Your iso2 is also a good isomorphism, but it only works when the second part is

Bool:It’s inverse is

Notice that

notis an isomorphism of booleans.December 8, 2015 at 4:18 pm

Hello!

I wonder, is there a better of example of coproducts in C++ (C#, Java ) then tagged unions? In general, tagged unions are something you’d like to avoid, unless you are dealing with some low level code, where its usage is justified. This creates an impression that the idea of coproducts is not that useful for regular programmers. Or is it different for Haskell? That Either type, is it used much in everyday Haskell programming?

December 9, 2015 at 3:24 pm

@Leonid: The implementation of tagged unions in C++ is awkward and their usage unnecessarily complicated. In Haskell they are very simple to use, because Haskell supports pattern matching. There is talk of introducing pattern matching in C++, so things might improve.

January 15, 2016 at 1:54 am

Hi Bartosz,

Thanks for the great series. In challenge 5, I implement the ‘m’ function in Java like so:

with IntCoProduct just a wrapper of Integer as coproduct. Notice the calls e.getLeft() and e.getRight(). If presented on a graph, there would be no arrows coming from e to either left or right originally. These getters are introduced as value pickers from Either and obviously are inverted arrows of Either’s constructors, thus allowing composing i with these new arrows. My question is: Where are these arrows actually from? Do I get them automatically when having the Either object?

There’s another interpretation I could think of, is that these getters are actually just a way to extract Either’s internal value, not actually belong to the category. Am I right?

January 15, 2016 at 10:56 am

Btw, here’s my take on the last challenge:

There’re 2 acceptable morphisms from int to Either:

January 15, 2016 at 4:19 pm

@Huy Le Van: In 5,

mis supposed to return anInteger.In 8, I actually made a mistake, which I have just corrected: There should be multiplems fromEither, not toEither. Sorry about that.January 17, 2016 at 2:21 pm

If one

mfromEithertointwas enough to disqualifyintas a ‘better’ candidate thanEither, why would you want to have multiplems?Btw, in the previous comment, I said that

IntCoProductwas just a wrapper ofint, so that I could haveiandjas its members. I could’ve written:My question remains: Where do the getters come from? Are they just the ways to extract internal value of

Either, not arrows between objects in our category?January 18, 2016 at 4:34 pm

@@Huy Le Van: Points for making me doubt myself 😉 The original problem 8 was correct (I reverted the change). Your solution is correct. Neither i nor j have number 2 in their image, so your m has the freedom to map it to Either(true) or Either(false). Your trick is reminiscent of the Hilbert’s Grand Hotel. You made three rooms avaliable by shifting guests forward by 3 rooms.

January 18, 2016 at 6:36 pm

You didn’t answer my second question about

Either‘s getters. Would you? I’ve read this chapter more than 5 times trying to understand everything. This is the only question I have left.January 19, 2016 at 9:03 pm

Sorry about that.

Getters for a sum type are partial functions. If you call GetLeft on an Either that is Right, what do you expect to happen? You could return an option or Maybe, but that’s a different type (and an exception would be yet another type). So getters are not morphisms, which are supposed to be total functions.

The closest to a getter in the pure-function world is a function that takes two handlers, one for Left values and one for Right values. That’s equivalent to Haskell pattern matching.

January 19, 2016 at 9:37 pm

Thank you, Bartosz. It’s all clear to me now.

April 7, 2016 at 3:07 am

I’ve been wondering: what if we have a product like construction with more than two arrows — let’s say, three? Is it still a product?

I’m curious because e.g. the Wikipedia article defines product to have just three objects and two arrows.

April 7, 2016 at 9:20 am

@Constantine: It’s still called a product. You can have more (or less) than two arrows (projections). Such diagrams define “finite products”. A category that has all finite products is called a cartesian category.

As for the Wikipedia article, the diagram you mentioned generalizes the idea to a set of objects, not necessarily countable. For instance, you could define a product in which objects are indexed by real numbers rather than integers. An example would be sets of reals that are greater than a given x. You could try to form a product of all such sets.

May 31, 2016 at 7:55 pm

@BartoszMilewski,

The “boss” category you propose, above in #27, perfectly illustrates a conceptual difficulty I’m having with your definition of initial object criteria: “at most, one morphism going to any other object.”

By that criteria, don’t all bosses in the category qualify as the initial object? (No boss would have multiple morphisms going to any particular subordinate, right?) It seems what makes the CEO unique among bosses is that he has no morphism going to him.

But, I seem to recall you saying, somewhere else, that initial object qualification has nothing to do with morphisms going to an object, but only with morphisms going from an object. I’m confused by this and would appreciate any light you can shed. Thanks!

May 31, 2016 at 8:07 pm

*** SPOILER ALERT ***

Potential answer to Challenge #7, below.

@BartoszMilewski,

Is the point of Challenge #7 to notice that the precise “gap” (i.e. – two elements) introduced by the i() injector produces an isomorphism between the injectors and Either representations, because it provides zero information loss in either direction? (That is, there is no longer any ambiguity, regarding values ‘0’ and ‘1’; they had to come from the j() injector.)

May 31, 2016 at 8:26 pm

The initial object must have a morphism going to (be a boos of) any object (employee). A division manager is not the boss of the CEO.

June 1, 2016 at 6:54 am

Oh, I see. You mean that the initial object must have a morphism going to all objects in the category; right?

Suggestion: in the definition of initial object, above, change the verbiage, “any object,” to, “any and all objects.”

Thanks!

July 31, 2016 at 3:26 am

So, is a terminal object being a coproduct? As far as I see it satisfies the requirements.

Also, I noticed that all examples on (co)products have them defined for all objects in a category, is it an accident? As I see from definition, they could exist just for a few objects of many ones in a category, right?

July 31, 2016 at 9:45 am

Why don’t you try to prove that the terminal object is a coproduct?

It’s true that a coproduct could exist for some objects and not others.

July 31, 2016 at 10:30 am

Because for I never worked with Category Theory before, I’m afraid of making a mistake which is impossible to check. There’s no calculator, or something, to check whether am I right. Or there is? You know, if people could always trust themselves, programming languages would never got type systems ☺

Proof: a Coproduct have incoming arrows from objects, and so have a Terminal Object. A Coproduct should also have an outgoing arrow to objects (if they exist) having incoming arrows from every object, having an arrow to Coproduct. So, a category with a Terminal Object may have an object with arrows from every object except of Terminal Object, because its definition doesn’t say anything about outgoing morphisms. Hence, in general, Terminal Object is not a coproduct. Q.E.D.

July 31, 2016 at 11:08 am

Notice that the definition starts with “A coproduct of two objects a and b.” So what are the two objects you’re talking about?

July 31, 2016 at 11:36 am

Hm… Well, though that would simplify the proof, but, a Product could consist of more than just two objects (acc. to Wikipedia), hence, duality of Coproduct to Product implies that Coproduct could consist of more than just two objects too.

July 31, 2016 at 1:30 pm

So you want to prove that the terminal object is the coproduct of all objects in a category? But it’s not! I can find a better candidate, say

Bool. There is a function from any type toBooland there is a unique factorizing function from Unit

()(the terminal object in Hask) toBool:I’m not saying that

Boolis the coproduct of all types (it’s not!), but its existence proves that the terminal object Unit is not!(I think you mistakenly assumed that there can be no morphisms going out of the terminal object. Yes, the name is a little misleading.)

July 31, 2016 at 2:56 pm

Err… But, isn’t that what I proved? I proved that Terminal Object is not a Coproduct, but with a bit another way: because Terminal Object definition says nothing about an outgoing morphisms.

September 1, 2016 at 1:35 am

Hi Bartosz, I’m a bit stuck with consciouscircle’s attempted counterexample with (Bool,Int) as a possible alternative product for Int and Bool that doesn’t have a unique isomorphism to (Int,Bool). So, he demonstrates that

iso1 (x,y) = (y, x)

iso2 (x,y) = (not y, x)

are two possible isomorphisms between (Int,Bool) and (Bool,Int), and you say that iso1 is more general since it’s parametric.

What confuses me is my understanding that the product of two objects is normally determined on a case-by-case basis. So, it’s just a nice feature of Haskell that we can express (a,b) as the product of any two objects in Hask, but the general discussion of products in category theory is supposed to be monomorphic, am I wrong?

So, from this lens, iso1 and iso2 should be equally general for the specific case of Int and Bool. At this point, I feel like we either have to drop the uniqueness requirement for the isomorphism between candidates of a product or have to say that Int and Bool have no product in Hask.

September 1, 2016 at 5:39 pm

Thank you for keeping me on my toes. Indeed, it would seem that the isomorphism is not unique. However, you have to take into account that a product is not just a type. It’s a type plus two projections. So we are really talking about three candidates here:

iso1maps 1 to 2, whereasiso2maps 1 to 3.September 1, 2016 at 8:45 pm

Wow, thanks, this clears it up nicely!

November 4, 2016 at 1:09 pm

Hi!

How are injections different from morphisms?

November 4, 2016 at 3:44 pm

You mean, from other morphisms? They are morphisms that are part of this particular pattern. Compare them with projections, which are part of the product pattern.

February 14, 2017 at 3:49 pm

Bartosz = my new hero of teaching. You are the only one I have found that makes Category Theory at all simple. I find that books about it become clear only after I understand, but you have a way somehow of starting at the right level. I am a career programmer so perhaps that is why your approach works for me.

Thank You!

February 23, 2017 at 4:04 am

Thanks a lot for this series!

I have one comment. When you say:

“Here are some examples: The initial object in a partially ordered set (often called a poset) is its least element. Some posets don’t have an initial object — like the set of all integers, positive and negative.”

It is slightly confusing because “set of all integers” is not a category until a morphism is defined on it. So would it be more precise to say: “Some posets don’t have an initial object — like the set of all integers, positive and negative, where a morphism exists between two elements a and b, if a < b”.

Maybe that was implied/obvious in what you said. While one can come up with morphisms on the set of integers where initial objects do exist (like: “a morphism exits between a and b if |a| < |b|”), it was not was meant.

But the following threw me off:

“In the category of sets, the initial object is the empty set.”.

Without defining what are the arrows (in the category of sets), how can one claim that the initial object is the empty set?

February 23, 2017 at 10:02 am

@Kathick: You’re right. I made the changes you suggested. Thanks!

April 25, 2017 at 3:11 am

Hi Bartosz, thanks for doing this series, it’s really helpful for a non mathematician like myself! One minor thing – couldn’t you define the empty type in C++ like this:

class Void { Void() = delete; };Or am I missing something?

April 25, 2017 at 6:16 am

@Simon: It’s probably okay, although in C++ you never know 😉

August 15, 2017 at 7:37 am

@Bartosz, thanks for these great posts. Can you please tell me how one would describe addition and multiplication on natural numbers in terms of product and coproduct?

August 15, 2017 at 9:20 am

Take a category FinSet of finite sets and functions between them, and identify all sets that are isomorphic. It has an empty set 0, a singleton 1, a doubleton 2, etc. In this category 2 is a coproduct of two 1s, 3 is a coproduct of 1 and 2 or three 1s and so on. You can identify objects with natural numbers and addition with coproduct.

August 15, 2017 at 3:10 pm

@Bartosz, In that case what would be the explanation(justification) to prove that the 2 3 product has the best rank compared to 2 3 or 2 3 ?

August 15, 2017 at 10:17 pm

@Bartosz, Sorry some of the characters were dropped from my previous comment. Here I write it again:

In that case what would be the explanation(justification) to prove that the 2 3 product has the best rank compared to 2 3

or 2 3 ?

August 15, 2017 at 10:19 pm

for some reason it drops some of the characters. what I mean is how do we compare the product of (2,3) giving 6 versus (2,3) giving 12 versus (2,3)giving 4?

August 16, 2017 at 10:27 am

Let’s do a simpler example: why is 1+1 two and not three? First, how is 1+1 two? Because there are two injections, functions from a singleton object to a doubleton. One picks one element, the other picks the other element. But three has the same property — in fact it has three possible injections, but let’s just pick the first two. But two is a better coproduct because there is a unique function from two to three that picks exactly the same elements. Let me write it in Haskell:

September 27, 2017 at 12:18 pm

So are you saying coconut is a nut!

September 27, 2017 at 1:19 pm

Here’s my nerdy joke on this topic 😉

“Honey, Cocoon is on.”

“That’s because duality is an involution”

September 29, 2017 at 1:00 am

So, why are those constructions called universal? The Catster’s video seems to say that it is because all other candidates (to be initial, product…) are worse, in some sense. Is that right?

September 29, 2017 at 3:09 pm

They are universal because they define something by specifying its relationship to everything else in the category. You can’t just focus on a single object or a small neighborhood, you have to take in the whole category — the Universe in which your objects live.

September 30, 2017 at 4:47 am

Understood.

Thank you, teacher. 😉

October 25, 2017 at 7:49 pm

Thanks for Publishing this book.

I do not understand how regular multiplication is represented in category products. What exactly are the natural numbers? The morphisms or the objects? If the objects are the natural numbers, how can we map many different morphisms to the same numbers?

Thanks again

October 26, 2017 at 3:43 am

Categorical product generalizes cartesian product of sets: all possible pairs of elements of two sets. To multiply two numbers, you fist need a pair of numbers. This might seem trivial, but it’s essential when you want to generalize multiplication to arbitrary categories. Categorical product of an object with itself generalizes such a reservoir of pairs. Multiplication is then a morphism from this product back to the object. One such morphism gives you all possible multiplications. I come back to this in the chapter on monoidal categories.

October 26, 2017 at 5:15 am

ok. Still dont really understand but I will press on. Thank you.

January 27, 2018 at 5:28 pm

HI Bartosz,

I join the chorus thanking you for the excellent introduction. 🙂

I find there is a sizeable gap between the intuition you give for isomorphism (“two objects that have the same shape”) and the actual definition (“there is an inverse morphism between them”).

By “shape”, I take it to mean the objects connect to the same neighbors… something like graph isomorphism. Maybe this is the mistake I am making.

Consider a category containing three objects A, B and C. There is one morphism from A to C and another from B to C. By all accounts, A and B seem to me to have “the same shape”. They each have an arrow to themselves and to C. So, one would think (from intuition) that they have the “same shape” and are isomorphic. And yet, they do not have an invertible morphism between them.

It is true that the other way around works better: if there is an invertible morphism between two objects Alpha and Beta in a category, composition will cause morphism from Alpha (Beta) to a third object Gamma to imply a morphism from Beta (Alpha) to Gamma, so they end up having “the same shape” (at least in my understanding of shape). But even then, going from the definition to the conclusion that the “same shape” intuition holds takes some thinking. The definition does not support the intuition directly; rather, it implies a theorem that supports the intuition.

Am I missing something?

January 28, 2018 at 8:44 am

The “same shape” intuition comes from outside of category theory. You can tell that, because I’m saying “every part of an object,” which contradicts the idea that categorical objects have no parts. It’s true that isomorphic objects have the same relationship to the rest of the category but, as you correctly observe, the converse is not true.

February 2, 2018 at 12:59 pm

So, since my intuition for “same shape” turned out not to hold water (at least in all cases), I am curious as to what you have in mind when you say “same shape”. This this something that can be precisely defined, or just an imprecise idea? Thanks.

February 3, 2018 at 1:53 am

In essence, the only definition of “same shape” is given by a morphism in category theory. You just build a category in such a way that morphism preserve shape in whatever your starting mathematical theory was. For instance, you first look at all monoids as sets, and define morphism as preserving unit and multiplication. Then you forget that they were sets, and keep morphisms. Voila, you have a category of monoids.

February 3, 2018 at 3:41 am

So you are saying that “same shape” is based on the morphisms alone. And in the post, you said “same shape” is equivalent to isomorphism. But then I gave an example in which two objects have morphism-same-shape but are not isomorphic. So either I don’t understand what you mean by “same shape”, or “same shape” is not completely equivalent to isomorphism.

February 4, 2018 at 9:15 am

The actual definition is a pair of invertible morphisms. Same-shape (which is a literal translation of Greek of iso-morphism) is a useful intuition. Don’t get too attached to it.

February 4, 2018 at 9:38 am

Ok, thanks! 🙂

March 21, 2018 at 11:05 am

Hi Bartosz!

Two things from my side:

1. From my understanding your definition of “initial object” does not agree with this https://en.wikipedia.org/wiki/Initial_and_terminal_objects. What I understand from “The initial object is the object that has one and only one morphism going to any object in the category.” is that there is only one morphism that goes from the initial object to all the others in the category while wikipedia says something different: “an initial object of a category C is an object I in C such that for every object X in C, there exists precisely one morphism I → X”. Makes sense what I’m saying?

2. Regarding the poset example right after the definition of initial object, the related category has one object for each element in the poset? For example, if we consider the set F={1,2,3,4,5}, does the related category have 5 objects? Makes sense in general to have objects that represent only one element of another set?

March 26, 2018 at 6:32 am

September 28, 2018 at 9:15 am

One little question, since the coproduct can have the commute diagram like:

a-> Either a b)->(b->Either a b)->Either a b->c ?

while c is our coproduct,

then why don’t we denote the type notation of the factorizer like:

factorizer::(a->Either a b)->(b->Either a b)->Either a b->c ?

October 23, 2018 at 4:41 pm

I find coproduct in C++ as a tagged union, or as Either in Haskell, to be confusing when compared to a set coproduct which is a disjoint union.

Namely, disjoint union contains both elements while C++ tagged union can have only one at the time, either one or another but never both.

Not sure that is important in this case but does rub me as I’m missing something.

October 29, 2018 at 1:08 pm

I guess I am committing a ‘cardinal sin’ here: looking inside the objects. Instead, I should be looking only at morphisms. It doesn’t matter what disjoint union contains and what C++ tagged union contain, respectively – inclusion morphisms in both cases is what matters.

December 10, 2018 at 4:18 am

I am confused about this sentences: “Neither would the unique morphism from the coproduct to our singleton.”

But the singleton is the terminal object, there must be a unique morphism from the coproduct to our singleton from definition.

Am I misunderstand something? Thanks.

August 18, 2019 at 11:29 am

Thanks for your great series. I just came back from YouTube and I’m starting to read your book now.

This is my solution for Challenge #7

Answer is Yes, int with the two injections i and j is “better” than Either, because there is morphism m factorizes Left and Right

m :: Int -> Either Int Bool

m 0 = Right True

m 1 = Right False

m n = if n < 0 then Left n else Left (n-2)

(m . i) (-1) == Left -1

(m . i) (0) == Left 0

(m . i) (1) == Left 1

(m . j) (True) == Right True

(m . j) (False) == Right False

August 25, 2019 at 12:46 am

Hi Bartosz, Really enjoying the category theory journey. I’m puzzled. A Product c has projection morphisms which de-structure it into components a and b. My intuition was that a Coproduct would show the reverse how c was created in the first place as the combination of a an b. To my surprise, Coproduct shows instead how to create a c to be either an a or a b. What pattern of morphisms allow us to create a c out of both a and b – suitable to be destructured by a pair of projection morphisms?

September 10, 2019 at 5:02 pm

A morphism can have only one source object, so it’s not possible to create a c from both a and b.

November 23, 2019 at 8:01 am

“The composition g∘f must be a morphism from i1 to i1. But i1 is initial so there can only be one morphism going from i1 to i1. Since we are in a category, we know that there is an identity morphism from i1 to i1, and since there is room for only one, that must be it. Therefore g∘f is equal to identity. Similarly, f∘g must be equal to identity, because there can be only one morphism from i2 back to i2.”

It seems like logically the followup sentence “Therefore any two initial objects are isomorphic” actually should read “Therefore there is only one initial object” and so the diagram of the two isomorphic objects is wrong – it doesn’t match the category.

Perhaps the problem here is that you never defined what is meant by “up to”. I had the same problem with my category theory instructor, who refused to define “up to”. Long story short, I was forced to withdraw from the course.

November 23, 2019 at 9:23 am

“Unique up to isomorphism” means that, if there is more than one, they must be isomorphic. The simplest example of a category with two initial objects is a category with two objects a and b, and two non-identity morphisms f :: a -> b and g :: b -> a, with g being the inverse of f (f . g = id_b, g . f = id_a). Check that a is an initial object and b is an initial object. And they are indeed isomorphic.

October 2, 2020 at 4:18 am

in the line “Since m () is the element of the product picked by m, these equations tell use that the element picked by p from the first set, p ()”

it should be us instead of use

October 2, 2020 at 8:09 am

Done! Thanks!

November 25, 2020 at 6:28 am

I don’t quite get the ranking thing. How can I decide whether an m has a higher rank than an m’? In your example you prove that the (Int, Bool) is a “better” product than just Int, because there is the m from Int to (Int, Bool), and p and q can be constructed from it.

But I can easily find an m’ from (Int, Bool) to Int and construct “fst” and “snd” using it:

m’ (x, _) = x

fst = p . m’

snd = q . m’ (which will be wrong half of the times)

So just by looking at category with m and m’, how can I tell which is better: m or m’?

April 5, 2021 at 8:25 pm

[…] functional programming, the fundamental building blocks of data types are products and coproducts. These are collectively referred to as Algebraic Data Types (ADTs) — a functional programming […]

September 22, 2022 at 6:07 am

Hi Bartosz, Thanks for the great content. I had a doubt in product lecture. While defining Products, we ranked how one object is better candidate than other object for a product by universal construction. However, the actual product c can have different morphisms to a and b let’s say p’::c->a and q’::c->b that is not actually the “fst::c->a” and “snd::c->b”. Now how do we rank that fst and snd are the best candidates in morphisms not some other pair p’:: c -> a and q’::c->b. Thanks.

September 22, 2022 at 7:46 am

We just show that there is a unique h from a x b back to a x b that factorizes these p’ and q’ through fst and snd.

September 23, 2022 at 5:52 am

I may be wrong, but I am going through some examples of set and functions category just for clear understanding. (This is regarding Product doubt I had in previous comment)

Let’s assume a = Int, b = Bool

Then a x b = (Int, Bool)

fst :: (Int, Bool) -> Int

fst (x, _) = x

snd :: (Int, Bool) -> Bool

snd (_, x) = x

Let’s have p’ and q’ as:

p’ :: (Int, Bool) -> Int

p’ (x, _) = x + 1

q’ :: (Int, Bool) -> Int

q’ (_, x) = !x

Now, we can have m that prime factorises p’ and q’

m :: (Int, Bool) -> Int

m (x, y) = (x + 1, !y)

And clearly p’ = fst . m

And q’ = snd . m

That suggests fst and snd are better

However, I also have a mapping m’ that prime factorises fst and snd

m’ :: (Int, Bool) -> Int

m’ (x, y) = (x – 1, !y)

Here clearly fst = p’ . m’

And snd = q’ . m’

So, m prime factorises p’ and q’

And m’ prime factorises fst and snd

So, none of (fst, snd) and (p’ and q’) should be better candidate right? Or am I missing something here?

Thank You

September 23, 2022 at 8:56 am

You are correct. Notice that m’ is the inverse of m, so it’s an isomorphism. There are many isomorphic products of a and b (for instance, (b, a) is isomorphic to (a, b)). The ones you are comparing happen to have the same data (Int, Bool), but they have different projections.

July 19, 2023 at 8:45 pm

For the last challenge, that is, there are multiple

m's that make:which:

i :: Int -> Either Int Bool

i = Left

j :: Bool -> Either Int Bool

j = Right

But I can’t figure out how to have more than one

m', can you give a hint? Thanks.July 20, 2023 at 4:20 am

Try something like:

September 22, 2023 at 1:16 am

When you said this:

Did you mean the category of sets and functions? (If you didn’t then I don’t understand this bit.)

September 22, 2023 at 5:13 am

Yes, I meant the category called Set, in which functions are morphisms. But you’re right, you can also build a category where set inclusions are the morphisms.

December 5, 2023 at 8:48 am

Thanks for the series! It’s awesome. Some questions about Assymetry section: “It’s the relationship of the singleton set to the product that sets it apart from the coproduct.” What type of relationship? In section we can see that unit can be consider as product and there’s m that factorise p and q, what’s the point of this statement, not clear for me.