Functors from a monoidal category C to Set form a monoidal category with Day convolution as product. A monoid in this category is a lax monoidal functor. We define an initial algebra using a higher order functor and show that it corresponds to a free lax monoidal functor.

Recently I’ve been obsessing over monoidal functors. I have already written two blog posts, one about free monoidal functors and one about free monoidal profunctors. I followed some ideas from category theory but, being a programmer, I leaned more towards writing code than being preoccupied with mathematical rigor. That left me longing for more elegant proofs of the kind I’ve seen in mathematical literature.

I believe that there isn’t that much difference between programming and math. There is a whole spectrum of abstractions ranging from assembly language, weakly typed languages, strongly typed languages, functional programming, set theory, type theory, category theory, and homotopy type theory. Each language comes with its own bag of tricks. Even within one language one starts with some relatively low level encodings and, with experience, progresses towards higher abstractions. I’ve seen it in Haskell, where I started by hand coding recursive functions, only to realize that I can be more productive using bulk operations on types, then building recursive data structures and applying recursive schemes, eventually diving into categories of functors and profunctors.

I’ve been collecting my own bag of mathematical tricks, mostly by reading papers and, more recently, talking to mathematicians. I’ve found that mathematicians are happy to share their knowledge even with outsiders like me. So when I got stuck trying to clean up my monoidal functor code, I reached out to Emily Riehl, who forwarded my query to Alexander Campbell from the Centre for Australian Category Theory. Alex’s answer was a very elegant proof of what I was clumsily trying to show in my previous posts. In this blog post I will explain his approach. I should also mention that most of the results presented in this post have already been covered in a comprehensive paper by Rivas and Jaskelioff, Notions of Computation as Monoids.

Lax Monoidal Functors

To properly state the problem, I’ll have to start with a lot of preliminaries. This will require some prior knowledge of category theory, all within the scope of my blog/book.

We start with a monoidal category , that is a category in which you can “multiply” objects using some kind of a tensor product

. For any pair of objects

and

there is an object

; and this mapping is functorial in both arguments (that is, you can also “multiply” morphisms). A monoidal category will also have a special object

that is the unit of multiplication. In general, the unit and associativity laws are satisfied up to isomorphism:

These isomorphisms are called, respectively, the left and right unitors, and the associator.

The most familiar example of a monoidal category is the category of types and functions, in which the tensor product is the cartesian product (pair type) and the unit is the unit type ().

Let’s now consider functors from to the category of sets,

. These functors also form a category called

, in which morphisms between any two functors are natural transformations.

In Haskell, a natural transformation is approximated by a polymorphic function:

type f ~> g = forall x. f x -> g x

The category is monoidal, with cartesian product

serving as a tensor product, and the singleton set

as the unit.

We are interested in functors in that preserve the monoidal structure. Such a functor should map the tensor product in

to the cartesian product in

and the unit

to the singleton set

. Accordingly, a strong monoidal functor

comes with two isomorphisms:

We are interested in a weaker version of a monoidal functor called lax monoidal functor, which is equipped with a one-way natural transformation:

and a one-way morphism:

A lax monoidal functor must also preserve unit and associativity laws.

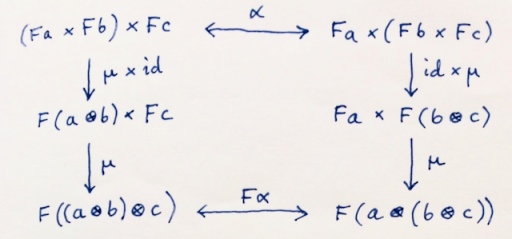

Associativity law: is the associator in the appropriate category (top arrow, in Set; bottom arrow, in C).

In Haskell, a lax monoidal functor can be defined as:

class Monoidal f where eta :: () -> f () mu :: (f a, f b) -> f (a, b)

It’s also known as the applicative functor.

Day Convolution and Monoidal Functors

It turns out that our category of functors is also equipped with monoidal structure. Two functors

and

can be “multiplied” using Day convolution:

Here, is the hom-set, or the set of morphisms from

to

. The integral sign stands for a coend, which can be interpreted as a generalization of an (infinite) coproduct (modulo some identifications). An element of this coend can be constructed by injecting a triple consisting of a morphism from

, an element of the set

, and an element of the set

, for some

and

.

In Haskell, a coend corresponds to an existential type, so the Day convolution can be defined as:

data Day f g c where Day :: ((a, b) -> c, f a, g b) -> Day f g c

(The actual definition uses currying.)

The unit with respect to Day convolution is the hom-functor:

which assigns to every object the set of morphisms

and acts on morphisms by post-composition.

The proof that this is the unit is instructive, as it uses the standard trick: the co-Yoneda lemma. In the coend form, the co-Yoneda lemma reads, for a covariant functor :

and for a contravariant functor :

(The mnemonics is that the integration variable must appear twice, once in the negative, and once in the positive position. An argument to a contravariant functor is in a negative position.)

Indeed, substituting for the first functor in Day convolution produces:

which can be “integrated” over using the Yoneda lemma to yield:

and, since is the unit of the tensor product, this can be further “integrated” over

to give

. The right unit law is analogous.

To summarize, we are dealing with three monoidal categories: with the tensor product

and unit

,

with the cartesian product and singleton

, and a functor category

with Day convolution and unit

.

A Monoid in [C, Set]

A monoidal category can be used to define monoids. A monoid is an object equipped with two morphisms — unit and multiplication:

These morphisms must satisfy unit and associativity conditions, which are best illustrated using commuting diagrams.

Unit laws. λ and ρ are the unitors.

Associativity law: α is the associator.

This definition of a monoid can be translated directly to Haskell:

class Monoid m where eta :: () -> m mu :: (m, m) -> m

It so happens that a lax monoidal functor is exactly a monoid in our functor category . Since objects in this category are functors, a monoid is a functor

equipped with two natural transformations:

At first sight, these don’t look like the morphisms in the definition of a lax monoidal functor. We need some new tricks to show the equivalence.

Let’s start with the unit. The first trick is to consider not one natural transformation but the whole hom-set:

The set of natural transformations can be represented as an end (which, incidentally, corresponds to the forall quantifier in the Haskell definition of natural transformations):

The next trick is to use the Yoneda lemma which, in the end form reads:

In more familiar terms, this formula asserts that the set of natural transformations from the hom-functor to

is isomorphic to

.

There is also a version of the Yoneda lemma for contravariant functors:

The application of Yoneda to our formula produces , which is in one-to-one correspondence with morphisms

.

We can use the same trick of bundling up natural transformations that define multiplication .

and representing this set as an end over the hom-functor:

Expanding the definition of Day convolution, we get:

The next trick is to pull the coend out of the hom-set. This trick relies on the co-continuity of the hom-functor in the first argument: a hom-functor from a colimit is isomorphic to a limit of hom-functors. In programmer-speak: a function from a sum type is equivalent to a product of functions (we call it case analysis). A coend is a generalized colimit, so when we pull it out of a hom-functor, it turns into a limit, or an end. Here’s the general formula, in which is an arbitrary profunctor:

Let’s apply it to our formula:

We can combine the ends under one integral sign (it’s allowed by the Fubini theorem) and move to the next trick: hom-set adjunction:

In programming this is known as currying. This adjunction exists because is a cartesian closed category. We’ll use this adjunction to move

to the right:

Using the Yoneda lemma we can “perform the integration” over to get:

This is exactly the set of natural transformations used in the definition of a lax monoidal functor. We have established one-to-one correspondence between monoidal multiplication and lax monoidal mapping.

Of course, a complete proof would require translating monoid laws to their lax monoidal counterparts. You can find more details in Rivas and Jaskelioff, Notions of Computation as Monoids.

We’ll use the fact that a monoid in the category is a lax monoidal functor later.

Alternative Derivation

Incidentally, there are shorter derivations of these formulas that use the trick borrowed from the proof of the Yoneda lemma, namely, evaluating things at the identity morphism. (Whenever mathematicians speak of Yoneda-like arguments, this is what they mean.)

Starting from and plugging in the Day convolution formula, we get:

There is a component of this natural transformation at that is the morphism:

This morphism must be defined for all possible values of the coend. In particular, it must be defined for the triple , giving us the

we seek.

There is also an alternative derivation for the unit: Take the component of the natural transformation at

:

is guaranteed to contain at least one element, the identity morphism

. We can use

as the (only) value of the lax monoidal constraint at the singleton

.

Free Monoid

Given a monoidal category , we might be able to define a whole lot of monoids in it. These monoids form a category

. Morphisms in this category correspond to those morphisms in

that preserve monoidal structure.

Consider, for instance, two monoids and

. A monoid morphism is a morphism

in

such that the unit of

is related to the unit of

:

and similarly for multiplication:

Remember, we assumed that the tensor product is functorial in both arguments, so it can be used to lift a pair of morphisms.

There is an obvious forgetful functor from

to

which, for every monoid, picks its underlying object in

and maps every monoid morphism to its underlying morphism in

.

The left adjoint to this functor, if it exists, will map an object in

to a free monoid

.

The intuition is that a free monoid is a list of

.

In Haskell, a list is defined recursively:

data List a = Nil | Cons a (List a)

Such a recursive definition can be formalized as a fixed point of a functor. For a list of a, this functor is:

data ListF a x = NilF | ConsF a x

Notice the peculiar structure of this functor. It’s a sum type: The first part is a singleton, which is isomorphic to the unit type (). The second part is a product of a and x. Since the unit type is the unit of the product in our monoidal category of types, we can rewrite this functor symbolically as:

It turns out that this formula works in any monoidal category that has finite coproducts (sums) that are preserved by the tensor product. The fixed point of this functor is the free functor that generates free monoids.

I’ll define what is meant by the fixed point and prove that it defines a monoid. The proof that it’s the result of a free/forgetful adjunction is a bit involved, so I’ll leave it for a future blog post.

Algebras

Let’s consider algebras for the functor . Such an algebra is defined as an object

called the carrier, and a morphism:

called the structure map or the evaluator.

In Haskell, an algebra is defined as:

type Algebra f x = f x -> x

There may be a lot of algebras for a given functor. In fact there is a whole category of them. We define an algebra morphism between two algebras and

as a morphism

which commutes with the two structure maps:

The initial object in the category of algebras is called the initial algebra, or the fixed point of the functor that generates these algebras. As the initial object, it has a unique algebra morphism to any other algebra. This unique morphism is called a catamorphism.

In Haskell, the fixed point of a functor f is defined recursively:

newtype Fix f = In { out :: f (Fix f) }

with, for instance:

type List a = Fix (ListF a)

A catamorphism is defined as:

cata :: Functor f => Algebra f a -> Fix f -> a cata alg = alg . fmap (cata alg) . out

A list catamorphism is called foldr.

We want to show that the initial algebra of the functor:

is a free monoid. Let’s see under what conditions it is a monoid.

Initial Algebra is a Monoid

In this section I will show you how to concatenate lists the hard way.

We know that function type (a.k.a., the exponential

) is the right adjoint to the product:

The function type is also called the internal hom.

In a monoidal category it’s sometimes possible to define an internal hom-object, denoted , as the right adjoint to the tensor product:

If this adjoint exists, the category is called closed monoidal.

In a closed monoidal category, the initial algebra of the functor

is a monoid. (In particular, a Haskell list of

a, which is a fixed point of ListF a, is a monoid.)

To show that, we have to construct two morphisms corresponding to unit and multiplication (in Haskell, empty list and concatenation):

What we know is that is a carrier of the initial algebra for

, so it is equipped with the structure map:

which is equivalent to a pair of morphisms:

Notice that, in Haskell, these correspond the two list constructors: Nil and Cons or, in terms of the fixed point:

nil :: () -> List a nil () = In NilF cons :: a -> List a -> List a cons a as = In (ConsF a as)

We can immediately use to implement

.

The second one, , one can be rewritten using the hom adjuncion as:

Notice that, if we could prove that is a carrier for the same algebra generated by

, we would know that there is a unique catamorphism from the initial algebra

:

which, by the hom adjunction, would give us the desired multiplication:

Let’s establish some useful lemmas first.

Lemma 1: For any object in a closed monoidal category,

is a monoid.

This is a generalization of the idea that endomorphisms form a monoid, in which identity morphism is the unit and composition is multiplication. Here, the internal hom-object generalizes the set of endomorphisms.

Proof: The unit:

follows, through adjunction, from the unit law in the monoidal category:

(In Haskell, this is a fancy way of writing mempty = id.)

Multiplication takes the form:

which is reminiscent of composition of edomorphisms. In Haskell we would say:

mappend = (.)

By adjunction, we get:

We have at our disposal the counit of the adjunction:

We can apply it twice to get:

In Haskell, we could express this as:

mu :: ((x -> x), (x -> x)) -> (x -> x) mu (f, g) = \x -> f (g x)

Here, the counit of the adjunction turns into simple function application.

Lemma 2: For every morphism , where

is a monoid, we can construct an algebra of the functor

with

as its carrier.

Proof: Since is a monoid, we have two morphisms:

To show that is a carrier of our algebra, we need two morphisms:

The first one is the same as , the second can be implemented as:

In Haskell, we would do case analysis:

mapAlg :: Monoid m => ListF a m -> m mapAlg NilF = mempty mapAlg (ConsF a m) = f a `mappend` m

We can now build a larger proof. By lemma 1, is a monoid with:

We also have a morphism so, by lemma 2,

is also a carrier for the algebra:

It follows that there is a unique catamorphism from the initial algebra

to it, and we know how to use it to implement monoidal multiplication for

. Therefore,

is a monoid.

Translating this to Haskell, is the curried form of

Cons and what we have shown is that concatenation (multiplication of lists) can be implemented as a catamorphism:

concat :: List a -> List a -> List a

conc x y = cata alg x y

where alg NilF = id

alg (ConsF a t) = (cons a) . t

The type:

List a -> (List a -> List a)

(parentheses added for emphasis) corresponds to .

It’s interesting that concatenation can be described in terms of the monoid of list endomorphisms. Think of turning an element a of the list into a transformation, which prepends this element to its argument (that’s what does). These transformations form a monoid. We have an algebra that turns the unit

into an identity transformation on lists, and a pair

(where

is a list transformation) into the composite

. The catamorphism for this algebra takes a list

and turns it into one composite list transformation. We then apply this transformation to another list and get the final result: the concatenation of two lists.

Incidentally, lemma 2 also works in reverse: If a monoid is a carrier of the algebra of

, then there is a morphism

. This morphism can be thought of as inserting generators represented by

into the monoid

.

Proof: if is both a monoid and a carrier for the algebra

, we can construct the morphism

by first applying the identity law to go from

to

, then apply

to get

. This can be right-injected into the coproduct

and then evaluated down to

using the structure map for the algebra on

.

In Haskell, this corresponds to a construction and evaluation of:

ConsF a mempty

Free Monoidal Functor

Let’s go back to our functor category. We started with a monoidal category and considered a functor category

. We have shown that

is itself a monoidal category with Day convolution as tensor product and the hom functor

as unit. A monoid is this category is a lax monoidal functor.

The next step is to build a free monoid in , which would give us a free lax monoidal functor. We have just seen such a construction in an arbitrary closed monoidal category. We just have to translate it to

. We do this by replacing objects with functors and morphisms with natural transformations.

Our construction relied on defining an initial algebra for the functor:

Straightforward translation of this formula to the functor category produces a higher order endofunctor:

It defines, for any functor , a mapping from a functor

to a functor

. (It also maps natural transformations.)

We can now use to define (higher-order) algebras. An algebra consists of a carrier — here, a functor

— and a structure map — here, a natural transformation:

The initial algebra for this higher-order endofunctor defines a monoid, and therefore a lax monoidal functor. We have shown it for an arbitrary closed monoidal category. So the only question is whether our functor category with Day convolution is closed.

We want to define the internal hom-object in that satisfies the adjunction:

We start with the set of natural transformations — the hom-set in :

We rewrite it as an end over , and use the formula for Day convolution:

We use the co-continuity trick to pull the coend out of the hom-set and turn it into an end:

Keeping in mind that our goal is to end up with on the left, we use the regular hom-set adjunction to shuffle the other two terms to the right:

The hom-functor is continuous in the second argument, so we can sneak the end over under it:

We end up with a set of natural transformations from the functor to the functor we will call:

We therefore identify this functor as the right adjoint (internal hom-object) for Day convolution. We can further simplify it by using the hom-set adjunction:

and applying the Yoneda lemma to get:

In Haskell, we would write it as:

newtype DayHom f g a = DayHom (forall b . f b -> g (a, b))

Since Day convolution has a right adjoint, we conclude that the fixed point of our higher order functor defines a free lax monoidal functor. We can write it in a recursive form as:

or, in Haskell:

data FreeMonR f t =

Done t

| More (Day f (FreeMonR f) t)

Free Monad

This blog post wouldn’t be complete without mentioning that the same construction works for monads. Famously, a monad is a monoid in the category of endofunctors. Endofunctors form a monoidal category with functor composition as tensor product and the identity functor as unit. The fact that we can construct a free monad using the formula:

is due to the observation that functor composition has a right adjoint, which is the right Kan extension. Unfortunately, due to size issues, this Kan extension doesn’t always exist. I’ll quote Alex Campbell here: “By making suitable size restrictions, we can give conditions for free monads to exist: for example, free monads exist for accessible endofunctors on locally presentable categories; a special case is that free monads exist for finitary endofunctors on , where finitary means the endofunctor preserves filtered colimits (more generally, an endofunctor is accessible if it preserves

-filtered colimits for some regular cardinal number

).”

Conclusion

As we acquire experience in programming, we learn more tricks of trade. A seasoned programmer knows how to read a file, parse its contents, or sort an array. In category theory we use a different bag of tricks. We bunch morphisms into hom-sets, move ends and coends, use Yoneda to “integrate,” use adjunctions to shuffle things around, and use initial algebras to define recursive types.

Results derived in category theory can be translated to definitions of functions or data structures in programming languages. A lax monoidal functor becomes an Applicative. Free monoidal functor becomes:

data FreeMonR f t =

Done t

| More (Day f (FreeMonR f) t)

What’s more, since the derivation made very few assumptions about the category (other than that it’s monoidal), this result can be immediately applied to profunctors (replacing

with

) to produce:

data FreeMon p s t where

DoneFM :: t -> FreeMon p s t

MoreFM :: p a b -> FreeMon p c d ->

(b -> d -> t) ->

(s -> (a, c)) -> FreeMon p s t

Replacing Day convolution with endofunctor composition gives us a free monad:

data FreeMonadF f g a =

DoneFM a

| MoreFM (Compose f g a)

Category theory is also the source of laws (commuting diagrams) that can be used in equational reasoning to verify the correctness of programming constructs.

Writing this post has been a great learning experience. Every time I got stuck, I would ask Alex for help, and he would immediately come up with yet another algebra and yet another catamorphism. This was so different from the approach I would normally take, which would be to get bogged down in inductive proofs over recursive data structures.