This is part 18 of Categories for Programmers. Previously: It’s All About Morphisms. See the Table of Contents.

In mathematics we have various ways of saying that one thing is like another. The strictest is equality. Two things are equal if there is no way to distinguish one from another. One can be substituted for the other in every imaginable context. For instance, did you notice that we used equality of morphisms every time we talked about commuting diagrams? That’s because morphisms form a set (hom-set) and set elements can be compared for equality.

But equality is often too strong. There are many examples of things being the same for all intents and purposes, without actually being equal. For instance, the pair type (Bool, Char) is not strictly equal to (Char, Bool), but we understand that they contain the same information. This concept is best captured by an isomorphism between two types — a morphism that’s invertible. Since it’s a morphism, it preserves the structure; and being “iso” means that it’s part of a round trip that lands you in the same spot, no matter on which side you start. In the case of pairs, this isomorphism is called swap:

swap :: (a,b) -> (b,a) swap (a,b) = (b,a)

swap happens to be its own inverse.

Adjunction and Unit/Counit Pair

When we talk about categories being isomorphic, we express this in terms of mappings between categories, a.k.a. functors. We would like to be able to say that two categories C and D are isomorphic if there exists a functor R (“right”) from C to D, which is invertible. In other words, there exists another functor L (“left”) from D back to C which, when composed with R, is equal to the identity functor I. There are two possible compositions, R ∘ L and L ∘ R; and two possible identity functors: one in C and another in D.

But here’s the tricky part: What does it mean for two functors to be equal? What do we mean by this equality:

R ∘ L = ID

or this one:

L ∘ R = IC

It would be reasonable to define functor equality in terms of equality of objects. Two functors, when acting on equal objects, should produce equal objects. But we don’t, in general, have the notion of object equality in an arbitrary category. It’s just not part of the definition. (Going deeper into this rabbit hole of “what equality really is,” we would end up in Homotopy Type Theory.)

You might argue that functors are morphisms in the category of categories, so they should be equality-comparable. And indeed, as long as we are talking about small categories, where objects form a set, we can indeed use the equality of elements of a set to equality-compare objects.

But, remember, Cat is really a 2-category. Hom-sets in a 2-category have additional structure — there are 2-morphisms acting between 1-morphisms. In Cat, 1-morphisms are functors, and 2-morphisms are natural transformations. So it’s more natural (can’t avoid this pun!) to consider natural isomorphisms as substitutes for equality when talking about functors.

So, instead of isomorphism of categories, it makes sense to consider a more general notion of equivalence. Two categories C and D are equivalent if we can find two functors going back and forth between them, whose composition (either way) is naturally isomorphic to the identity functor. In other words, there is a two-way natural transformation between the composition R ∘ L and the identity functor ID, and another between L ∘ R and the identity functor IC.

Adjunction is even weaker than equivalence, because it doesn’t require that the composition of the two functors be isomorphic to the identity functor. Instead it stipulates the existence of a one way natural transformation from ID to R∘L, and another from L∘R to IC. Here are the signatures of these two natural transformations:

η :: ID -> R ∘ L ε :: L ∘ R -> IC

η is called the unit, and ε the counit of the adjunction.

Notice the asymmetry between these two definitions. In general, we don’t have the two remaining mappings:

R ∘ L -> ID -- not necessarily IC -> L ∘ R -- not necessarily

Because of this asymmetry, the functor L is called the left adjoint to the functor R, while the functor R is the right adjoint to L. (Of course, left and right make sense only if you draw your diagrams one particular way.)

The compact notation for the adjunction is:

L ⊣ R

To better understand the adjunction, let’s analyze the unit and the counit in more detail.

Let’s start with the unit. It’s a natural transformation, so it’s a family of morphisms. Given an object d in D, the component of η is a morphism between I d, which is equal to d, and (R ∘ L) d; which, in the picture, is called d':

ηd :: d -> (R ∘ L) d

Notice that the composition R∘L is an endofunctor in D.

This equation tells us that we can pick any object d in D as our starting point, and use the round trip functor R ∘ L to pick our target object d'. Then we shoot an arrow — the morphism ηd — to our target.

By the same token, the component of of the counit ε can be described as:

εc :: (L ∘ R) c -> c

It tells us that we can pick any object c in C as our target, and use the round trip functor L ∘ R to pick the source c' = (L ∘ R) c. Then we shoot the arrow — the morphism εc — from the source to the target.

Another way of looking at unit and counit is that unit lets us introduce the composition R ∘ L anywhere we could insert an identity functor on D; and counit lets us eliminate the composition L ∘ R, replacing it with the identity on C. That leads to some “obvious” consistency conditions, which make sure that introduction followed by elimination doesn’t change anything:

L = L ∘ ID -> L ∘ R ∘ L -> IC ∘ L = L

R = ID ∘ R -> R ∘ L ∘ R -> R ∘ IC = R

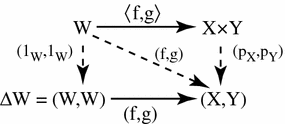

These are called triangular identities because they make the following diagrams commute:

These are diagrams in the functor category: the arrows are natural transformations, and their composition is the horizontal composition of natural transformations. In components, these identities become:

ε L d ∘ L η d = id L d R ε c ∘ η R c = id R c

We often see unit and counit in Haskell under different names. Unit is known as return (or pure, in the definition of Applicative):

return :: d -> m d

and counint as extract:

extract :: w c -> c

Here, m is the (endo-) functor corresponding to R∘L, and w is the (endo-) functor corresponding to L∘R. As we’ll see later, they are part of the definition of a monad and a comonad, respectively.

If you think of an endofunctor as a container, the unit (or return) is a polymorphic function that creates a default box around a value of arbitrary type. The counit (or extract) does the reverse: it retrieves or produces a single value from a container.

We’ll see later that every pair of adjoint functors defines a monad and a comonad. Conversely, every monad or comonad may be factorized into a pair of adjoint functors — this factorization is not unique, though.

In Haskell, we use monads a lot, but only rarely factorize them into pairs of adjoint functors, primarily because those functors would normally take us out of Hask.

We can however define adjunctions of endofunctors in Haskell. Here’s part of the definition taken from Data.Functor.Adjunction:

class (Functor f, Representable u) =>

Adjunction f u | f -> u, u -> f where

unit :: a -> u (f a)

counit :: f (u a) -> a

This definition requires some explanation. First of all, it describes a multi-parameter type class — the two parameters being f and u. It establishes a relation called Adjunction between these two type constructors.

Additional conditions, after the vertical bar, specify functional dependencies. For instance, f -> u means that u is determined by f (the relation between f and u is a function, here on type constructors). Conversely, u -> f means that, if we know u, then f is uniquely determined.

I’ll explain in a moment why, in Haskell, we can impose the condition that the right adjoint u be a representable functor.

Adjunctions and Hom-Sets

There is an equivalent definition of the adjunction in terms of natural isomorphisms of hom-sets. This definition ties nicely with universal constructions we’ve been studying so far. Every time you hear the statement that there is some unique morphism, which factorizes some construction, you should think of it as a mapping of some set to a hom-set. That’s the meaning of “picking a unique morphism.”

Furthermore, factorization can be often described in terms of natural transformations. Factorization involves commuting diagrams — some morphism being equal to a composition of two morphisms (factors). A natural transformation maps morphisms to commuting diagrams. So, in a universal construction, we go from a morphism to a commuting diagram, and then to a unique morphism. We end up with a mapping from morphism to morphism, or from one hom-set to another (usually in different categories). If this mapping is invertible, and if it can be naturally extended across all hom-sets, we have an adjunction.

The main difference between universal constructions and adjunctions is that the latter are defined globally — for all hom-sets. For instance, using a universal construction you can define a product of two select objects, even if it doesn’t exist for any other pair of objects in that category. As we’ll see soon, if the product of any pair of objects exists in a category, it can be also defined through an adjunction.

Here’s the alternative definition of the adjunction using hom-sets. As before, we have two functors L :: D->C and R :: C->D. We pick two arbitrary objects: the source object d in D, and the target object c in C. We can map the source object d to C using L. Now we have two objects in C, L d and c. They define a hom-set:

C(L d, c)

Similarly, we can map the target object c using R. Now we have two objects in D, d and R c. They, too, define a hom set:

D(d, R c)

We say that L is left adjoint to R iff there is an isomorphism of hom sets:

C(L d, c) ≅ D(d, R c)

that is natural both in d and c.

Naturality means that the source d can be varied smoothly across D; and the target c, across C. More precisely, we have a natural transformation φ between the following two (covariant) functors from C to Set. Here’s the action of these functors on objects:

c -> C(L d, c) c -> D(d, R c)

The other natural transformation, ψ, acts between the following (contravariant) functors:

d -> C(L d, c) d -> D(d, R c)

Both natural transformations must be invertible.

It’s easy to show that the two definitions of the adjunction are equivalent. For instance, let’s derive the unit transformation starting from the isomorphism of hom-sets:

C(L d, c) ≅ D(d, R c)

Since this isomorphism works for any object c, it must also work for c = L d:

C(L d, L d) ≅ D(d, (R ∘ L) d)

We know that the left hand side must contain at least one morphism, the identity. The natural transformation will map this morphism to an element of D(d, (R ∘ L) d) or, inserting the identity functor I, a morphism in:

D(I d, (R ∘ L) d)

We get a family of morphisms parameterized by d. They form a natural transformation between the functor I and the functor R ∘ L (the naturality condition is easy to verify). This is exactly our unit, η.

Conversely, starting from the existence of the unit and co-unit, we can define the transformations between hom-sets. For instance, let’s pick an arbitrary morphism f in the hom-set C(L d, c). We want to define a φ that, acting on f, produces a morphism in D(d, R c).

There isn’t really much choice. One thing we can try is to lift f using R. That will produce a morphism R f from R (L d) to R c — a morphism that’s an element of D((R ∘ L) d, R c).

What we need for a component of φ, is a morphism from d to R c. That’s not a problem, since we can use a component of ηd to get from d to (R ∘ L) d. We get:

φf = R f ∘ ηd

The other direction is analogous, and so is the derivation of ψ.

Going back to the Haskell definition of Adjunction, the natural transformations φ and ψ are replaced by polymorphic (in a and b) functions leftAdjunct and rightAdjunct, respectively. The functors L and R are called f and u:

class (Functor f, Representable u) =>

Adjunction f u | f -> u, u -> f where

leftAdjunct :: (f a -> b) -> (a -> u b)

rightAdjunct :: (a -> u b) -> (f a -> b)

The equivalence between the unit/counit formulation and the leftAdjunct/rightAdjunct formulation is witnessed by these mappings:

unit = leftAdjunct id counit = rightAdjunct id leftAdjunct f = fmap f . unit rightAdjunct f = counit . fmap f

It’s very instructive to follow the translation from the categorical description of the adjunction to Haskell code. I highly encourage this as an exercise.

We are now ready to explain why, in Haskell, the right adjoint is automatically a representable functor. The reason for this is that, to the first approximation, we can treat the category of Haskell types as the category of sets.

When the right category D is Set, the right adjoint R is a functor from C to Set. Such a functor is representable if we can find an object rep in C such that the hom-functor C(rep, _) is naturally isomorphic to R. It turns out that, if R is the right adjoint of some functor L from Set to C, such an object always exists — it’s the image of the singleton set () under L:

rep = L ()

Indeed, the adjunction tells us that the following two hom-sets are naturally isomorphic:

C(L (), c) ≅ Set((), R c)

For a given c, the right hand side is the set of functions from the singleton set () to R c. We’ve seen earlier that each such function picks one element from the set R c. The set of such functions is isomorphic to the set R c. So we have:

C(L (), -) ≅ R

which shows that R is indeed representable.

Product from Adjunction

We have previously introduced several concepts using universal constructions. Many of those concepts, when defined globally, are easier to express using adjunctions. The simplest non-trivial example is that of the product. The gist of the universal construction of the product is the ability to factorize any product-like candidate through the universal product.

More precisely, the product of two objects a and b is the object (a × b) (or (a, b) in the Haskell notation) equipped with two morphisms fst and snd such that, for any other candidate c equipped with two morphisms p::c->a and q::c->b, there exists a unique morphism m::c->(a, b) that factorizes p and q through fst and snd.

As we’ve seen earlier, in Haskell, we can implement a factorizer that generates this morphism from the two projections:

factorizer :: (c -> a) -> (c -> b) -> (c -> (a, b)) factorizer p q = \x -> (p x, q x)

It’s easy to verify that the factorization conditions hold:

fst . factorizer p q = p snd . factorizer p q = q

We have a mapping that takes a pair of morphisms p and q and produces another morphism m = factorizer p q.

How can we translate this into a mapping between two hom-sets that we need to define an adjunction? The trick is to go outside of Hask and treat the pair of morphisms as a single morphism in the product category.

Let me remind you what a product category is. Take two arbitrary categories C and D. The objects in the product category C×D are pairs of objects, one from C and one from D. The morphisms are pairs of morphisms, one from C and one from D.

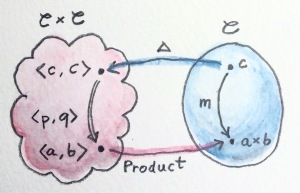

To define a product in some category C, we should start with the product category C×C. Pairs of morphism from C are single morphisms in the product category C×C.

It might be a little confusing at first that we are using a product category to define a product. These are, however, very different products. We don’t need a universal construction to define a product category. All we need is the notion of a pair of objects and a pair of morphisms.

However, a pair of objects from C is not an object in C. It’s an object in a different category, C×C. We can write the pair formally as <a, b>, where a and b are objects of C. The universal construction, on the other hand, is necessary in order to define the object a×b (or (a, b) in Haskell), which is an object in the same category C. This object is supposed to represent the pair <a, b> in a way specified by the universal construction. It doesn’t always exist and, even if it exists for some, might not exist for other pairs of objects in C.

Let’s now look at the factorizer as a mapping of hom-sets. The first hom-set is in the product category C×C, and the second is in C. A general morphism in C×C would be a pair of morphisms <f, g>:

f :: c' -> a g :: c'' -> b

with c'' potentially different from c'. But to define a product, we are interested in a special morphism in C×C, the pair p and q that share the same source object c. That’s okay: In the definition of an adjunction, the source of the left hom-set is not an arbitrary object — it’s the result of the left functor L acting on some object from the right category. The functor that fits the bill is easy to guess — it’s the diagonal functor from C to C×C, whose action on objects is:

Δ c = <c, c>

The left-hand side hom-set in our adjunction should thus be:

(C×C)(Δ c, <a, b>)

It’s a hom-set in the product category. Its elements are pairs of morphisms that we recognize as the arguments to our factorizer:

(c -> a) -> (c -> b) ...

The right-hand side hom-set lives in C, and it goes between the source object c and the result of some functor R acting on the target object in C×C. That’s the functor that maps the pair <a, b> to our product object, a×b. We recognize this element of the hom-set as the result of the factorizer:

... -> (c -> (a, b))

We still don’t have a full adjunction. For that we first need our factorizer to be invertible — we are building an isomorphism between hom-sets. The inverse of the factorizer should start from a morphism m — a morphism from some object c to the product object a×b. In other words, m should be an element of:

C(c, a×b)

The inverse factorizer should map m to a morphism <p, q> in C×C that goes from <c, c> to <a, b>; in other words, a morphism that’s an element of:

(C×C)(Δ c, <a, b>)

If that mapping exists, we conclude that there exists the right adjoint to the diagonal functor. That functor defines a product.

In Haskell, we can always construct the inverse of the factorizer by composing m with, respectively, fst and snd.

p = fst ∘ m q = snd ∘ m

To complete the proof of the equivalence of the two ways of defining a product we also need to show that the mapping between hom-sets is natural in a, b, and c. I will leave this as an exercise for the dedicated reader.

To summarize what we have done: A categorical product may be defined globally as the right adjoint of the diagonal functor:

(C × C)(Δ c, <a, b>) ≅ C(c, a×b)

Here, a×b is the result of the action of our right adjoint functor Product on the pair <a, b>. Notice that any functor from C×C is a bifunctor, so Product is a bifunctor. In Haskell, the Product bifunctor is written simply as (,). You can apply it to two types and get their product type, for instance:

(,) Int Bool ~ (Int, Bool)

Exponential from Adjunction

The exponential ba, or the function object a⇒b, can be defined using a universal construction. This construction, if it exists for all pairs of objects, can be seen as an adjunction. Again, the trick is to concentrate on the statement:

For any other object

zwith a morphismg :: z × a -> bthere is a unique morphism

h :: z -> (a⇒b)

This statement establishes a mapping between hom-sets.

In this case, we are dealing with objects in the same category, so the two adjoint functors are endofunctors. The left (endo-)functor L, when acting on object z, produces z × a. It’s a functor that corresponds to taking a product with some fixed a.

The right (endo-)functor R, when acting on b produces the function object a⇒b (or ba). Again, a is fixed. The adjunction between these two functors is often written as:

- × a ⊣ (-)a

The mapping of hom-sets that underlies this adjunction is best seen by redrawing the diagram that we used in the universal construction.

Notice that the eval morphism is nothing else but the counit of this adjunction:

(a⇒b) × a -> b

where:

(a⇒b) × a = (L ∘ R) b

I have previously mentioned that a universal construction defines a unique object, up to isomorphism. That’s why we have “the” product and “the” exponential. This property translates to adjunctions as well: if a functor has an adjoint, this adjoint is unique up to isomorphism.

Challenges

- Derive the naturality square for

ψ, the transformation between the two (contravariant) functors:a -> C(L a, b) a -> D(a, R b)

- Derive the counit

εstarting from the hom-sets isomorphism in the second definition of the adjunction. - Complete the proof of equivalence of the two definitions of the adjunction.

- Show that the coproduct can be defined by an adjunction. Start with the definition of the factorizer for a coproduct.

- Show that the coproduct is the left adjoint of the diagonal functor.

- Define the adjunction between a product and a function object in Haskell.

Next: Free/Forgetful Adjunctions.

Acknowledgments

I’d like to thank Edward Kmett and Gershom Bazerman for checking my math and logic, and André van Meulebrouck, who has been volunteering his editing help throughout this series of posts.

Follow @BartoszMilewski

April 19, 2016 at 3:44 pm

I was just studying adjunctions for my Category Theory class and thought of writing an e-mail to ask you about it. Came to check your website and BAM! Amazing. I appreciate your work so much and can’t wait for the remaining parts!

April 23, 2016 at 6:20 am

I just discovered your book and I was wondering if it is possible to translate it in an other language? In that case, what would be your terms? I didn’t see any licence on the blog…

April 23, 2016 at 10:16 am

I’ve seen a Russian translation of the first few installments of the book. Are we talking of a translation in the blog form? If so, feel free as long as you provide links back to the original. What language? French?

April 23, 2016 at 12:11 pm

The translation would be in French. I was thinking to put it on a french website where there are a lot of tutorials, mostly about IT : http://www.zestedesavoir.com . Here is an example of a tutorial : https://zestedesavoir.com/tutoriels/674/apprenez-la-programmation-fonctionnelle-avec-haskell/ .

June 9, 2016 at 4:35 am

Thank you so much… I have just finished reading all the chapters until now.

I haven’t studied maths since I left uni, and it has been a real pleasure reading this blog. Smooth and simple. This is really valuable for me and I am sure, a lot of devs will find it very useful.

Thanks again. Can’t wait for the next chapters!

December 20, 2016 at 1:54 am

This paragraph in the introduction is giving me some problems:

“did you notice that we used equality of morphisms every time we talked about commuting diagrams? That’s because morphisms form a set (hom-set) and set elements can be compared for equality.”

So, in a not locally small category, in which hom-sets are not sent, can’t we form commuting diagrams? How can we define then associativity and identity?

December 20, 2016 at 11:02 am

@Juan Manuel: Yes, it’s tricky! The idea is that you replace hom-sets with objects from some category. You work with a category that’s “enriched” over a base category. That base category has hom-sets, so you can form commuting diagrams in it. That way you always bottom up at sets, but you postpone it as much as possible.

December 21, 2016 at 1:33 am

Sets give you comparison because sets are all about membership and to answer to membership questions you need equal comparison between elements.

So if you have sets of morphisms (hom-sets) you can form commuting diagrams because morphisms are comparable.

If you don’t, you have hom-objects in an enriched category. Then you need to compare objects but, to do it, if this enriched category is small, you can compare the hom-sets of the hom-objects and, if they are equal, both objects are equal, aren’t they?

It’s kind of definim equals on lists using equals on the elements of lists.

Am I on the right track?

December 21, 2016 at 9:06 am

You’re on the right track, except that you never want to compare objects for equality. You formulate all laws as commutative diagrams in the base category. Have a look at my post about impoverished categories.

August 29, 2017 at 6:00 pm

a typo: “The main difference between universal constructions and adjunctions it that the latter are defined globally” should be “is that” ?

March 20, 2018 at 1:19 am

At the triangular identities: “the arrows are natural transformations, and their composition is the horizontal composition” – shouldn’t it be vertical composition?

March 20, 2018 at 5:38 am

Vertical composition is for natural transformation between three functors that all share the common source and the common target category. Here we compose, for instance, L after η. L stands for identity natural transformation from L to L, a functor that goes from D to C. η is a natural transformation from ID to R∘L, both going from D to D. So we go horizontally, first from D to D and then from D to C.

July 9, 2018 at 8:13 pm

R εc ∘ η R c = id R c ??

May be they should be

η R c ∘ R ε c = id R c

I think the diagrams commute have some mistake.

It should be this https://zhuanlan.zhihu.com/p/39399260

July 9, 2018 at 10:44 pm

L (R c) -> c and c -> L (R c) are not the same.

εc ‘

::(L∘R)c – > c

but

in Rεc ‘

:: R(L(R c)) – > R c

εc ‘

:: c – >(L∘R)c

July 9, 2018 at 10:53 pm

R εc’ :: R (L (R c)) -> R c == R c’ -> R c == η /= id

July 9, 2018 at 11:19 pm

I’m sorry to write R εc’ :: R (L (R c)) -> R c == R c’ -> R c == η /= id. I am less thinking . But this is my think picture https://pic4.zhimg.com/v2-0d7cbd35c4665c37238b1989737fb743_r.jpg

July 10, 2018 at 9:20 am

I made some changes. Does it make sense now?

July 10, 2018 at 12:06 pm

When I learn this article,I build a example to help me to understand adjunctions (https://zhuanlan.zhihu.com/p/39426618). But in my example, R ε c ∘ η R c = id R c is strange wrong! (https://zhuanlan.zhihu.com/p/39467153)

I don’t know whether the example is right. But it is really beautiful. (https://zhuanlan.zhihu.com/p/39467250)

July 10, 2018 at 3:45 pm

Remember that ε and η have to satisfy naturality conditions. In your example, this only works when the arrows are the inverse of each other (1->2 being the inverse of 2->1, and so on).

July 10, 2018 at 6:14 pm

Two ε are equal and satisfy naturality conditions. For example : (L (R 1)) = 2 then ε 2 = 1 .

ε is decided by L∘R . ε is always equal and don’t need inverse of each other.

ε: 1 -> 3 , 2 -> 1, 3 -> 2

(https://zhuanlan.zhihu.com/p/39471131)

July 11, 2018 at 2:03 pm

Notice that ε2 is not an object, it’s a morphism. In your case, it’s a morphism from 2 to 1 (from L(R 1) to Id 1)

July 17, 2018 at 12:56 am

L∘η – this is horizontal composition

(L∘η) ∘ (ε∘L) – this is vertical composition

right?

July 17, 2018 at 11:10 am

Correct. I should probably clarify this in the text. Consistent with the notation I used elsewhere, the middle dot should be solid.

July 27, 2018 at 3:08 am

Challenge 1.

Derived (similarly to φ): ψ g = ε c ∘ L g.

But what does it mean ”Derive the naturality square” ? You did not do that for φ 🙂

October 27, 2018 at 10:50 am

Could you please elaborate a little bit what do you mean by that?

In an arbitrary category we need equality for objects in order to compose morphisms. We can compose

g ∘ fiffdom(g) = cod(f), wheredom(g)andcod(f)are objects. These objects might represent some mathematical constructs with a vague notion of equality, but it has to be well-defined the moment we start talking about objects in a category.We can define equality for objects in terms of their

idmorphisms:∀ a,b in category C: a = b ⇔ ∃ ida ∘ idbI guess, in general, we don’t have the notion of morphism equality in an arbitrary category though, and that’s the reason we cannot define functor equality in terms of equality of objects and morphisms.

October 27, 2018 at 1:58 pm

This is one of these tricky foundational questions that I tried to avoid. Strictly speaking there are two different definition of a category, depending on whether you consider one big collection of morphisms together with the source and target mappings to objects, or a separate collection of morphisms for every pair of objects (the hom-set) and a mapping between pairs of hom-sets as composition. Even if you take the first definition (which is what you’re considering), there are different ways of understanding the equality of objects. There is the definitional equality, as “literally the same object” and there is the propositional equality that requires a proof. If you have two different functors mapping the same object, you need a proof that the target objects are equal. If you want to dive deeper into this topic, here’s a good starting point: https://ncatlab.org/nlab/show/principle+of+equivalence .

October 29, 2018 at 9:40 am

Thanks, I think I understand now what you mean.

But I still struggle understanding if we need the notion of object equality for definitions of

idand∘. To me it seems like we need it in both definitions of a category that you mentioned. We just might want to relax or completely avoid the notion for other definitions, like in the case of functors equality, as it would unnecessarily inflict proving that involved objects are the same. In other words it seems like we still have the notion, even though arbitrary category for any pair of objects we cannot prove the equality.Thanks for your effort explaining the material!

December 3, 2018 at 9:41 am

What are adjunctive squares? Would they be helpful to show for example the commutativity found in universal construction definition of the product? I found that it’s not very obvious from the adjuction definition (for the product).

December 5, 2018 at 11:25 am

I have never heard the term “adjunctive squares.”

December 6, 2018 at 1:07 am

I found it in some papers. Something like this for product. I don’t know why it seemed clearer.

May 21, 2019 at 9:13 am

“set elements can be compared for equality” — are we talking about any set or specifically sets of morphisms? why can’t we define equality on classes for categories that are not locally small?

checking if 2 functors are the same — why can’t we use an identity natural transformation that assigns identity morphism to each object for a definition of equality?

May 22, 2019 at 9:33 am

It’s more a matter of philosophy of category theory. Here’s a quote from nLab: “The most common source of the breaking of equivalence-invariance is a statement that two objects of a given category are equal. One should instead say that they are isomorphic.”

October 16, 2019 at 11:41 am

In section where you talk about “Adjunction and Hom-Sets”, you talk about two functors F1: c -> C(Ld, c) and F2: c -> D(d, Rc) which in between we have the natural transformation Psi.

What is the relationship between those two functors and functors L and R? Is it that F1 = L . R and F2 = R . L ?

October 16, 2019 at 12:32 pm

I’m afraid there is no simple relationship.

October 16, 2019 at 1:45 pm

You say:

We know that the left hand side must contain at least one morphism, the identity. The natural transformation will map this morphism to an element of D(d, (R ∘ L) d) or, inserting the identity functor I, a morphism in:

D(I d, (R ∘ L) d)

We get a family of morphisms parameterized by d. They form a natural transformation between the functor I and the functor R ∘ L (the naturality condition is easy to verify). This is exactly our unit, η.

You mentioned two natural transformations which I assume are not the same! If so would you please tell me on which functors and categories each get applied?

Thanks.

October 16, 2019 at 3:17 pm

The first natural transformation is between the functors you call F1 and F2. The second is as described.

December 19, 2019 at 5:51 pm

I don’t see how the “triangular identities” are derived from the definition of unit and co-unit. Could you please expand on this point?

I understand that composing the natural transformations $L \circle \eta$ and $\epsilon \circle L$ gives a natural transformation between $L$ and itself $L$ for example, but why does this natural transformation need to be the identity?

December 20, 2019 at 1:24 pm

They are not derived; they are imposed. You can define the adjunction either using natural isomorphism between hom-sets, or implementing unit/counit that satisfies triangular identities.

December 23, 2019 at 9:22 am

You mentioned that “To summarize what we have done: A categorical product may be defined globally as the right adjoint of the diagonal functor”

It seems that we can use adjunction to define universal construction globally, use limit/colimit to define universal construction locally, Right?

For example, we can use limit to define a categorical product of two specific objects a and b in some category. But by adjunction, we can only define categorical product globally. That means if we have a adjunction Δ ⊣ Product between CxC and C, any pair of objects in C has a categorical product (it is very like cartesian closure category, except terminal object and exponential), Right?

Can we use adjunction to define all universal construction?

Of course, we have already known that some universal construction (e.g. exponential cannot be defined by limit/colimit).

The question is what’s the relation between limit/colimit, adjunction and universal construction?

December 23, 2019 at 11:05 am

Universal constructions are the most general. Limits and colimits are defined using them. Adjunction can also be defined using universal constructions but, as you noticed, they are defined globally.

January 27, 2021 at 6:21 pm

I’m having trouble following why the natural isomorphism C(Ld,c) ~= D(d, Rc) implies the existence of ε and η. I follow that this isomorphism means there’s an isomorphism C(L d, L d) ~= D(I d, (R ∘ L) d). And so for any object d there must be at least one morphism in D(I d, (R ∘ L) d), mapped from id in C(L d, L d). But how does one use this family of morphisms to construct a transformation that satisfies the naturality condition?

January 27, 2021 at 8:08 pm

In cases like this, naturality proof is pretty easy, if you carefuly write the commuting conditions. Hom sets have very simple functorial properties that boil down to either pre-composition or post-composition. It’s just some diagram chasing. But it’s a good exercise.

April 14, 2022 at 12:19 am

In challenge 5, I try to prove the naturality of the (

rightAdjunct, right?)factorizer, i.e. that for all morphisms<f, g>from<a,b>to<a',b'>, it isG <f, g> ∘ factorizer = factorizer ∘ F <f, g>, whereFis the<a, b> -> C(coproduct <a, b>, c)functor andGis the<a, b> -> CxC(<a, b>, Δ c)functor (right?). Being therightAdjunct,factorizermaps<a,b> -> <c,c>morphisms tocoproduct <a, b> -> cmorphisms.G <f, g> = D(<f, g>, R c)is a morphism from the hom-setCxC(<a, b>, Δ c)to the hom-setCxC(<a', b'>, Δ c'). The “types” don’t match! The output offactorizeriscoproduct <a, b> -> c, notCxC(<a, b>, Δ c), asG <f, g>expects. Where’s the mistake?April 14, 2022 at 12:38 pm

The two functors are:

They are contravariant bifunctors, so let’s define what it means:

Their action on morphisms is defined by:

instance CBfunctor (F c) where cdimap f g (F h) = F h' where h' (Left a') = h (Left (f a')) h' (Right b') = h (Right (g b'))April 15, 2022 at 12:29 pm

With you so far. Proving the naturality of

factorizermeans showing thatG f ∘ factorizer = factorizer ∘ F f. The problem is thatfactorizeroutputsEither a b -> c, whereasG fexpects input(a -> c) (b -> c). That’s a type mismatch, so there goes the naturality proof. Where am I wrong?April 15, 2022 at 1:45 pm

Naturality condition in this case is:

fact . G f g = F f g . fact.April 15, 2022 at 2:46 pm

I think I get it. The naturality condition is of this form because

FandGare contravariant?April 15, 2022 at 3:44 pm

There is only one way to draw the naturality square with:

factorizer :: G c a b -> F c a b factorizer (G f g) = F h where h (Left a) = f a h (Right b) = g bIt’s also a good exercise to show naturality in c (in which both functors are covariant).

April 16, 2022 at 3:43 am

I drew the diagrams and verified naturality. It all fits now, thanks! I also understood why I was confused in the first place. Halfway through this chapter there is:

I interpreted this to mean “

ψacts fromd -> C(L d, c)tod -> D(d, R c)“, but in fact it acts fromd -> D(d, R c)tod -> C(L d, c). I suggest to swap the two functors for clarity.April 16, 2022 at 9:42 am

It’s an isomorphism, so it acts both ways.

September 16, 2022 at 11:46 am

After finishing reading the blog post the 3rd time, I still don’t get it. The part where you introduce the adjoint is pretty clear, but I fail to understand how you use it to define product or exponential.

September 23, 2022 at 12:33 pm

Why there is no fst and snd morphisms in the definition of the product as an adjunction?

September 23, 2022 at 2:56 pm

It is there, but it’s hidden. Replace c with a×b in this formula:

(C × C)(Δ c, <a, b>) ≅ C(c, a×b)

Corresponding to id on the right, you have a pair <fst, snd> on the left. It’s the counit of this adjunction.

December 11, 2022 at 6:35 am

I’m trying to solve ex. 6, but, in trying to implement

Representablefor the function object, I cannot find a valid definition fortabulate.If I define the function object as

data FOT a b = FOV a b, then (if I understand correctly)(FOT a)isRepresentable. However it’s unclear to me how to express “for all valuesx :: a,tabulate gis FOV x (g x)” in Haskell (or even whether that’s what we want to express).If instead I define the function object as

data FOT f = FOV f, then I very quickly run into higher-kinded trouble, mainly becausefthen is* -> *, whereasRep FOT :: *. I even tried to change theRepresentable ftypeclass to haveRep f :: * -> *, to no avail. I anyway suspect that this second approach is inferior.What is the correct approach? What am I missing?

December 11, 2022 at 8:30 am

The correct definition of the function object would be

It’s represented by type

a, and tabulate is justFOV.