Lenses are a fascinating subject. Edward Kmett’s lens library is an indispensable tool in every Haskell programmer’s toolbox. I set out to write this blog post with the goal of describing some new insights into their categorical interpretation, but then I started reviewing all the different formulations of lenses and their relations to each other. So this post turned into a little summary of the theoretical underpinning of lenses.

If you’re already familiar with lenses, you may skip directly to the last section, which describes some new results.

The Data-Centric Picture

Lenses start as a very simple idea: an accessor/mutator or getter/setter pair — something familiar to every C++, Java, or C# programmer. In Haskell they can be described as two functions:

get :: s -> a
set :: s -> a -> s

Given an object of type s, the first function produces the value of the object’s sub-component of type a — which is the focus of the particular lens. The second function takes the object together with a new value for the sub-component, and produces a modified object.

A simple example is a lens that focuses on the first component of a pair:

get :: (a, a') -> a
get (x, y) = x

set :: (a, a') -> a -> (a, a')
set (x, y) x' = (x', y)

In Haskell we can go even further and define a lens for a polymorphic object, in which the setter changes not only the value of the sub-component, but also its type. This will, of course, also change the type of the resulting object. So, in general we have:

get :: s -> a
set :: s -> b -> t

Our pair example doesn’t change much on the surface:

get :: (a, a') -> a
get (x, y) = x

set :: (a, a') -> b -> (b, a')
set (x, y) x' = (x', y)
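To see the type change in action, here is a minimal sketch. The names getFst, setFst, and example are only illustrative (they are not part of any library); the code simply restates the getter and setter above and uses them to turn an (Int, Bool) into a (String, Bool).

```haskell
-- Type-changing getter/setter for the first component of a pair.
-- getFst and setFst are illustrative names, not library functions.
getFst :: (a, a') -> a
getFst (x, _) = x

setFst :: (a, a') -> b -> (b, a')
setFst (_, y) x' = (x', y)

-- Replace the Int in the pair with its String rendering.
-- The result has a different type than the input pair.
example :: (String, Bool)
example = setFst p (show (getFst p))
  where
    p = (42 :: Int, True)
```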

The difference is that the type of x' can now be different from the type of x. The first component of the pair is of type a before the update, and of type b (the same as that of x') after it. This way we can turn a pair of, say, (Int, Bool) to a pair of (String, Bool).

We can always go back to the monomorphic version of the lens by choosing b equal to a and t equal to s.

Not every pair of functions like these constitutes a lens. A lens has to obey a few laws (first formulated by Pierce in the database context). In particular, if you get a component after you set it, you should get back the value you just put in:

get . set s = id

If you call set with the value you obtained from get, you should get back the unchanged object:

set s (get s) = s

Finally, a set should overwrite the result of a previous set:

set (set s a) b = set s b
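The three laws can be spot-checked at sample points. The following is a sketch, not a proof: the predicate names setGet, getSet, and setSet, as well as the deliberately unlawful badSet, are my own illustrative choices.

```haskell
-- Monomorphic lens on the first component of a pair.
getFst :: (a, c) -> a
getFst (x, _) = x

setFst :: (a, c) -> a -> (a, c)
setFst (_, y) x' = (x', y)

-- A setter that also flips the second component (deliberately unlawful).
badSet :: (a, Bool) -> a -> (a, Bool)
badSet (_, y) x' = (x', not y)

-- Law: get (set s a) == a
setGet :: Eq a => (s -> a) -> (s -> a -> s) -> s -> a -> Bool
setGet get set s a = get (set s a) == a

-- Law: set s (get s) == s
getSet :: Eq s => (s -> a) -> (s -> a -> s) -> s -> Bool
getSet get set s = set s (get s) == s

-- Law: set (set s a) b == set s b
setSet :: Eq s => (s -> a -> s) -> s -> a -> a -> Bool
setSet set s a b = set (set s a) b == set s b
```

With getFst and setFst all three predicates hold at any sample point. With badSet the first law still holds, but the second and third fail, because every set silently changes a part of the object that is outside the focus.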

So this is what I would call a “classical” lens. It’s formulated in easy-to-understand programming terms.

The Algebraic Picture

It was Russell O’Connor who noticed that when you refactor the common element from the getter/setter pair, you get an interesting algebraic structure. Instead of writing the lens as two functions, we can write it as one function returning a pair:

s -> (a, b -> t)

Think of this as factoring out the “this” pointer in OO and returning the interface. Of course, the difference is that, in functional programming, the setter does not mutate the original — it returns a new version of the object instead.

Let’s for a moment concentrate on the monomorphic version of the lens — one in which a is the same as b, and s is the same as t. We can define a data structure:

data Store a s = Store a (a -> s)

and rewrite the lens as:

s -> Store a s

For instance, our first-component-of-a-pair lens will take the form:

fstl :: (a, b) -> Store a (a, b)
fstl (x, y) = Store x (\x' -> (x', y))

The first observation is that, for any a, Store a is a functor:

instance Functor (Store a) where
  fmap f (Store x h) = Store x (f . h)

It’s a well-known fact that you can define algebras for a functor, so-called F-algebras. An algebra for a functor f consists of a type s called the carrier type and a function called the action:

alg :: f s -> s

This is almost like a lens (with f replaced by Store a), except that the arrow goes the wrong way. Not to worry: there is a dual notion called a coalgebra. It consists of a carrier type s and a function:

coalg :: s -> f s

Substitute Store a for f and you see that a lens is nothing but a coalgebra for this functor. This is not saying much — we have just given a mathematical name to a programming construct, no big deal. Except that Store a is more than a functor — it’s also a comonad.

What’s a comonad? It’s a monad with the arrows reversed.

You know that you can define a monad in Haskell using return and join. Reverse the arrows on those two, and you get extract and duplicate, the two functions that define a comonad.

class (Functor w) => Comonad w where
  extract :: w a -> a
  duplicate :: w a -> w (w a)

Here, w is a type constructor that is also a functor.

This is how you can implement those two functions for Store a:

instance Comonad (Store a) where
  -- extract :: Store a s -> s
  extract (Store x h) = h x
  -- duplicate :: Store a s -> Store a (Store a s)
  duplicate (Store x h) = Store x (\y -> Store y h)

So now we have two structures: a comonad w and a coalgebra:

type Coalgebra w s = s -> w s

A lens is a special case of this coalgebra where w is Store a.

Every time you have two structures, you may legitimately ask the question: Are they compatible? Just by looking at types, you may figure out some obvious compatibility conditions. In particular, since they go the opposite way, it would make sense for extract to undo the action of coalg:

coalg :: Coalgebra w s
extract . coalg = id

Also, duplicating the result of coalg should be the same as applying coalg twice (the second time lifted by fmap):

fmap coalg . coalg = duplicate . coalg

If these two conditions are satisfied, we call coalg a comonad coalgebra.

And here’s the clincher:

These two conditions when applied to the Store a comonad are equivalent to our earlier lens laws.

Let’s see how it works. First we’ll express the result of our lens coalgebra acting on some object s in terms of get and set (curried set s is a function a->s):

coalg s = Store (get s) (set s)

The first condition extract . coalg = id immediately gives us the law:

set s (get s) = s

When we act with duplicate on coalg s, we get:

Store (get s) (\y -> Store y (set s))

On the other hand, when we fmap our coalg over coalg s, we get:

Store (get s) ((\s' -> Store (get s') (set s')) . set s)

Two functions — the second components of the Store objects in those equations — must be equal when acting on any a. The first function produces:

Store a (set s)

In the second one, we first apply set s to a to get set s a, which we then pass to the lambda to get:

Store (get (set s a)) (set (set s a))

This reproduces the other two (monomorphic) lens laws:

get (set s a) = a

and

set (set s a) = set s
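The two comonad coalgebra conditions can also be tested directly. Here is a sketch under some assumptions: extract and duplicate are written as plain functions rather than class methods, and since a Store contains a function, equality is only probed at a finite list of sample values. The names law1, law2, and bad are illustrative.

```haskell
data Store a s = Store a (a -> s)

instance Functor (Store a) where
  fmap f (Store x h) = Store x (f . h)

extract :: Store a s -> s
extract (Store x h) = h x

duplicate :: Store a s -> Store a (Store a s)
duplicate (Store x h) = Store x (\y -> Store y h)

-- The pair lens written as a coalgebra for Store a.
coalg :: (a, c) -> Store a (a, c)
coalg (x, y) = Store x (\x' -> (x', y))

-- An unlawful coalgebra: the setter flips the second component.
bad :: (a, Bool) -> Store a (a, Bool)
bad (x, y) = Store x (\x' -> (x', not y))

-- extract . coalg = id, checked at one object s.
law1 :: Eq s => (s -> Store a s) -> s -> Bool
law1 c s = extract (c s) == s

-- fmap coalg . coalg = duplicate . coalg, checked at one object s.
-- Functions inside Store are compared only at the given probe values.
law2 :: (Eq a, Eq s) => (s -> Store a s) -> [a] -> s -> Bool
law2 c probes s =
  let Store x1 k1 = fmap c (c s)
      Store x2 k2 = duplicate (c s)
   in x1 == x2 && all (\a -> sameAt (k1 a) (k2 a)) probes
  where
    sameAt (Store p h) (Store q g) = p == q && all (\b -> h b == g b) probes
```

For example, law1 coalg (1, 'x') and law2 coalg [0, 1, 2] (1, 'x') both hold, while law1 bad (1, True) fails, mirroring the failure of set s (get s) = s.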

This algebraic construction can be extended to type-changing lenses by replacing Store with its indexed version:

data IStore a b t = IStore a (b -> t)

and the comonad with its indexed counterpart. The indexed store is also called Context in the lens parlance, and an indexed comonad is also called a parametrized comonad.

So what’s an indexed comonad? Let’s start with an indexed functor. It’s a type constructor that takes three types, a, b, and s, and is a functor in the third argument:

class IxFunctor f where
  imap :: (s -> t) -> f a b s -> f a b t

IStore is obviously an indexed functor:

instance IxFunctor IStore where
  -- imap :: (s -> t) -> IStore a b s -> IStore a b t
  imap f (IStore x h) = IStore x (f . h)

An indexed comonad has the indexed versions of extract and duplicate:

class IxComonad w where
  iextract :: w a a t -> t
  iduplicate :: w a b t -> w a j (w j b t)

Notice that iextract is “diagonal” in the index types, whereas the double application of w shares one index, j, between the two applications. This plays very well with the unit and multiplication interpretation of a monad — here it looks just like matrix multiplication (although we are dealing with a comonad rather than a monad).

It’s easy to see that the instantiation of the indexed comonad for IStore works the same way as the instantiation of the comonad for Store. The types just work out that way.

instance IxComonad IStore where
  -- iextract :: IStore a a t -> t
  iextract (IStore a h) = h a
  -- iduplicate :: IStore a b t -> IStore a c (IStore c b t)
  iduplicate (IStore a h) = IStore a (\c -> IStore c h)
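Here is a small self-contained sketch showing how the shared index works out in practice. The helper ipeek and the sample value st are my own illustrative additions; the class and instance repeat the definitions above.

```haskell
data IStore a b t = IStore a (b -> t)

class IxComonad w where
  iextract :: w a a t -> t
  iduplicate :: w a b t -> w a j (w j b t)

instance IxComonad IStore where
  iextract (IStore a h) = h a
  iduplicate (IStore a h) = IStore a (\c -> IStore c h)

-- Hypothetical helper: apply the stored function to a new value.
ipeek :: b -> IStore a b t -> t
ipeek b (IStore _ h) = h b

-- Holds an Int, but expects a String to rebuild the whole.
st :: IStore Int String (String, Bool)
st = IStore 1 (\s -> (s, True))

-- After iduplicate, the intermediate index j is chosen by the caller.
-- Picking j = String makes the inner store diagonal, so iextract applies.
result :: (String, Bool)
result = iextract (ipeek "hi" (iduplicate st))
```

Note that iextract st itself would not type-check, because st is not diagonal: its two index types, Int and String, differ.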

There is also an indexed version of a comonad coalgebra, where the coalgebra is replaced by a family of mappings from some carrier type s to w a b t, with the type t determined by s together with the choice of the indexes a and b:

type ICoalg w s t a b = s -> w a b t

The compatibility conditions that make it an (indexed) comonad coalgebra are almost identical to the standard compatibility conditions, except that we have to be careful about the index types. Here’s the first condition:

icoalg_aa :: ICoalg w s t a a
iextract . icoalg_aa = id

Let’s analyze the types. The inner part has the type:

icoalg_aa :: s -> w a a t

We apply iextract to it, which has the type:

iextract :: w a a t -> t

and get:

iextract . icoalg_aa :: s -> t

The right hand side of the condition has the type:

id :: s -> s

It follows that, for the diagonal components of ICoalg w s t, t must be equal to s. The diagonal part of ICoalg w s t is therefore a family of regular coalgebras.

As we have done with the monomorphic lens, we can express (ICoalg IStore s t a b), when acting on s, in terms of get and set:

icoalg_ab s = IStore (get s) (set s)

But now get s is of type a, while set s is of the type b -> t. We can still apply iextract to the diagonal term IStore a a t as required by the first compatibility condition. When equating the result to id, we recover the lens law:

set s (get s) = s

Similarly, it’s straightforward to see that the second compatibility condition:

icoalg_bc :: ICoalg w s t b c
icoalg_ab :: ICoalg w s t a b
icoalg_ac :: ICoalg w s t a c
imap icoalg_bc . icoalg_ab = iduplicate . icoalg_ac

is equivalent to the other two lens laws.

The Parametric Picture

Despite being theoretically attractive, standard lenses were awkward to use and, in particular, to compose. The breakthrough came when Twan van Laarhoven realized that there is a higher-order representation for them that has very nice compositional properties. Composing lenses to focus on sub-objects of sub-objects turned into simple function composition.

Here’s Twan’s representation (generalized by Russell for the polymorphic case):

type Lens s t a b = forall f. Functor f => (a -> f b) -> (s -> f t)

So a lens is a polymorphic higher order function with a twist. The twist is that it’s polymorphic with respect to a functor rather than a type.

You can think of it this way: the caller provides a function to modify a particular field of s, turning it from type a to f b. What the caller gets back is a function that transforms the whole of s to f t. The idea is that the lens knows how to reconstruct the object, while putting it under a functor f — if you tell it how to modify a field, also under this functor.

For instance, continuing with our example, here’s the van Laarhoven lens that focuses on the first component of a pair:

vL :: Lens (a, c) (b, c) a b
vL h (x, x') = fmap (\y -> (y, x')) (h x)

Here, s is (a, c) and t is (b, c).

To see that the van Laarhoven representation is equivalent to the get/set one, let’s first change the order of arguments and pull s outside of the forall quantifier:

Lens s t a b = s -> (forall f. Functor f => (a -> f b) -> f t)

Here’s how you can read this definition: For a given s, if you give me a function from a to f b, I will produce a value of type f t. And I don’t care what functor you use!

What does it mean not to care about the functor? It means that the lens must be parametrically polymorphic in f. It can’t do case analysis on a functor. It must be implemented using the same formula for f being the list functor, or the Maybe functor, or the Const functor, etc. There’s only one thing all these functors have in common, and that’s the fmap function; so that’s what we are allowed to use in the implementation of the lens.

Now let’s think what we can do with a function a -> f b that we were given. There’s only one thing: apply it to some value of type a. So we must have access to a value of type a. The result of this application is some value of type f b, but we need to produce a value of the type f t. The only way to do it is to have a function of type b->t and sneak it under the functor using fmap (so here’s where the generic functor comes in). We conclude that the implementation of the function:

forall f. Functor f => (a -> f b) -> f t

must be hiding a value of type a and a function b->t. But that’s exactly the contents of IStore a b t. Parametricity tells us that there is an IStore hiding inside the van Laarhoven lens. The lens is equivalent to:

s -> IStore a b t

In fact, with a clever choice of functors we can recover both get and set from the van Laarhoven representation.

First we select our functor to be Const a. Note that the parameter a is not the one over which the functor is defined. Const a takes a second parameter b over which it is functorial. And, even though it takes b as a type parameter, it doesn’t use it at all. Instead, like a magician, it palms an a, and then reveals it at the end of the trick.

newtype Const a b = Const { getConst :: a }

instance Functor (Const a) where
  -- fmap :: (s -> t) -> Const a s -> Const a t
  fmap _ (Const a) = (Const a)

The constructor Const happens to be a function

Const :: a -> Const a b

which has the required form to be the first argument to the lens:

a -> f b

When we apply the lens, let’s call it vL, to the function Const, we get another function:

vL Const :: s -> Const a t

We can apply this function to s, and then, in the final reveal, retrieve the value of a that was smuggled inside Const a:

get vL s = getConst $ vL Const s

Similarly, we can recover set from the van Laarhoven lens using the Identity functor:

newtype Identity a = Identity { runIdentity :: a }

We define:

set vL s x = runIdentity $ vL (Identity . const x) s
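Putting the pieces together, here is a minimal runnable sketch. It assumes the RankNTypes extension and uses Const and Identity from base instead of redefining them; fstL is just an illustrative name for the pair lens shown earlier.

```haskell
{-# LANGUAGE RankNTypes #-}

import Data.Functor.Const (Const (..))
import Data.Functor.Identity (Identity (..))

type Lens s t a b = forall f. Functor f => (a -> f b) -> s -> f t

-- Lens focusing on the first component of a pair.
fstL :: Lens (a, c) (b, c) a b
fstL h (x, y) = fmap (\x' -> (x', y)) (h x)

-- Instantiate f to Const a: the focus is smuggled out unchanged.
get :: Lens s t a b -> s -> a
get l s = getConst (l Const s)

-- Instantiate f to Identity: ignore the old focus, plug in the new one.
set :: Lens s t a b -> s -> b -> t
set l s x = runIdentity (l (Identity . const x) s)
```

With these definitions, get fstL (1, 'x') evaluates to 1 and set fstL (1, 'x') "one" evaluates to ("one", 'x').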

The beauty of the van Laarhoven representation is that it composes lenses using simple function composition. A lens takes a function and returns a function. This function can, in turn, be passed as the argument to another lens, and so on.

There’s an interesting twist to this kind of composition — the function composition operator in Haskell is the dot, just like the field accessor in OO languages like Java or C++; and the composition follows the same order as the composition of accessors in those languages. This was first observed by Conal Elliott in the context of semantic editor combinators.

Consider a lens that focuses on the a field inside some object s. Its type is:

Lens s t a b

When given a function:

h :: a -> f b

it returns a function:

h' :: s -> f t

Now consider another lens that focuses on the s field inside some even bigger object u. Its type is:

Lens u w s t

It expects a function of the type:

g :: s -> f t

We can pass the result of the first lens directly to the second lens to form a composite:

Lens u w s t . Lens s t a b

We get a lens that focuses on the a field of the object s that is the sub-object of the big object u. It works just like in Java, where you apply a dot to the result of a getter or a setter, to dig deeper into a subobject.
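As a concrete sketch of this composition, assuming RankNTypes and the get/set helpers built from Const and Identity, we can compose two pair lenses with the ordinary dot. The names fstL, sndL, and sndFst are illustrative.

```haskell
{-# LANGUAGE RankNTypes #-}

import Data.Functor.Const (Const (..))
import Data.Functor.Identity (Identity (..))

type Lens s t a b = forall f. Functor f => (a -> f b) -> s -> f t

fstL :: Lens (a, c) (b, c) a b
fstL h (x, y) = fmap (\x' -> (x', y)) (h x)

sndL :: Lens (c, a) (c, b) a b
sndL h (x, y) = fmap (\y' -> (x, y')) (h y)

-- Outer lens first, inner lens second: just like obj.snd.fst in OO notation.
sndFst :: Lens (c, (a, d)) (c, (b, d)) a b
sndFst = sndL . fstL

get :: Lens s t a b -> s -> a
get l s = getConst (l Const s)

set :: Lens s t a b -> s -> b -> t
set l s x = runIdentity (l (Identity . const x) s)
```

Here get sndFst ('q', (1, True)) evaluates to 1, and set sndFst ('q', (1, True)) "x" evaluates to ('q', ("x", True)).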

Not only do lenses compose using regular function composition, but we can also use the identity function as the identity lens. So lenses form a category. It’s time to have a serious look at category theory. Warning: Heavy math ahead!

The Categorical Picture

I used parametricity arguments to justify the choice of the van Laarhoven representation for the lens. The lens function is supposed to have the same form for all functors f. Parametricity arguments have an operational feel to them, which is okay, but I feel like a solid categorical justification is more valuable than any symbol-shuffling argument. So I worked on it, and eventually came up with a derivation of the van Laarhoven representation using the Yoneda lemma. Apparently Russell O’Connor and Mauro Jaskelioff had similar feelings because they came up with the same result independently. We used the same approach, going through the Store functor and applying the Yoneda lemma twice, once in the functor category, and once in the Set category (see the Bibliography).

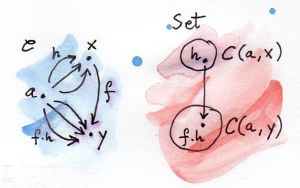

I would like to present the same result in a more general setting of the Yoneda embedding. It’s a direct consequence of the Yoneda lemma, and it states that any category can be embedded (fully and faithfully) in the category of functors from that category to Set.

Here’s how it works: Let’s fix some object a in some category C. For any object x in that category there is a hom-set C(a, x) of morphisms from a to x. A hom-set is a set — an object in the category Set of sets. So we have defined a mapping from C to Set that takes an x and maps it to the set C(a, x). This mapping is called C(a, _), with the underscore serving as a placeholder for the argument.

It’s easy to convince yourself that this mapping is in fact a functor from C to Set. Indeed, take any morphism f from x to y. We want to map this morphism to a function (a morphism in Set) that goes between C(a, x) and C(a, y). Let’s define this lifted function component-wise: given any element h from C(a, x) we can map it to f . h. It’s just a composition of two morphisms from C. The resulting morphism is a member of C(a, y). We have lifted a morphism f from C to Set thus establishing that C(a, _) is a functor.

Now consider two such functors, C(a, _) and C(b, _). The Yoneda embedding theorem tells us that there is a one-to-one correspondence between the set of natural transformations between these two functors and the hom-set C(b, a).

Nat(C(a, _), C(b, _)) ≅ C(b, a)

Notice the reversed order of a and b on the right-hand side.

Let’s rephrase what we have just seen. For every a in C, we can define a functor C(a, _) from C to Set. Such a functor is a member of the functor category Fun(C, Set). So we have a mapping from C to the functor category Fun(C, Set). Is this mapping a functor?

We have just seen that there is a mapping between morphisms in C and natural transformations in Fun(C, Set) — that’s the gist of the Yoneda embedding. But natural transformations are morphisms in the functor category. So we do have a functor from C to the functor category Fun(C, Set). It maps objects to objects and morphisms to morphisms. It’s a contravariant functor, because of the reversal of a and b. Moreover, it maps the hom-sets in the two categories one-to-one, so it’s a fully faithful functor, and therefore it defines an embedding of categories. Every category C can be embedded in the functor category Fun(C, Set). That’s called the Yoneda embedding.

There’s an interesting consequence of the Yoneda embedding: Every functor category can be embedded in its own functor category — just replace C with a functor category in the Yoneda embedding. Recall that functors between any two categories form a category. It’s a category in which objects are functors and morphisms are natural transformations. Yoneda embedding works for that category too, which means that a functor category can be embedded in a category of functors from that functor category to Set.

Let’s see what that means. We can fix one functor, say R and consider the hom-set from R to some arbitrary functor f. Since we are in a functor category, this hom-set is a set of natural transformations between the two functors, Nat(R, f).

Now let’s pick another functor S. It also defines a set of natural transformations Nat(S, f). We can keep picking functors and mapping them to sets (sets of natural transformations). In fact we know from the previous argument that this mapping is itself a functor. This time it’s a functor from a functor category to Set.

What does the Yoneda embedding tell us about any two such functors? That the set of natural transformations between them is isomorphic to the (reversed) hom-set. But this time hom-sets are sets of natural transformations. So we have:

Nat(Nat(R, _), Nat(S, _)) ≅ Nat(S, R)

All natural transformations in this formula are regular natural transformations except for the outer one, which is more interesting. You may recall that a natural transformation is a family of morphisms parameterized by objects. But in this case objects are functors, and morphisms are themselves natural transformations. So it’s a family of natural transformations parameterized by functors. Keep this in mind as we proceed.

To get a better feel of what’s happening, let’s translate this to Haskell. In Haskell we represent natural transformations as polymorphic functions. This makes sense, since a natural transformation is a family of morphisms (here functions) parameterized by objects (here types). So a member of Nat(R, f) can be represented as:

forall x. R x -> f x

Similarly, the second natural transformation in our formula turns into:

forall y. S y -> f y

As I said, the outer natural transformation in the Yoneda embedding is a family of natural transformations parameterized by a functor, so we get:

forall f. Functor f => (forall x. R x -> f x) -> (forall y. S y -> f y)

You can already see one element of the van Laarhoven representation: the quantification over a functor.

The right hand side of the Yoneda embedding is a natural transformation:

forall z. S z -> R z
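Both directions of this correspondence can be written down in Haskell. This is a sketch assuming RankNTypes; the names toOuter and fromOuter are mine, and the result types are written without a trailing forall to keep type checking simple. The example instantiates S to the list functor and R to Maybe.

```haskell
{-# LANGUAGE RankNTypes #-}

import Data.Maybe (listToMaybe, maybeToList)

-- From Nat(S, R) to the outer transformation: precompose with alpha.
toOuter :: (forall z. s z -> r z) -> (forall x. r x -> f x) -> s y -> f y
toOuter alpha nat = nat . alpha

-- From the outer transformation back to Nat(S, R):
-- pick f = R and feed in the identity natural transformation.
fromOuter ::
  Functor r =>
  (forall f. Functor f => (forall x. r x -> f x) -> s z -> f z) ->
  s z ->
  r z
fromOuter k = k id
```

For instance, toOuter listToMaybe maybeToList [1, 2, 3] evaluates to [1], and fromOuter (toOuter listToMaybe) [1, 2, 3] recovers listToMaybe and evaluates to Just 1.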

The next step is to pick the appropriate functors for R and S. We’ll take R to be IStore a b and S to be IStore s t.

Let’s work on the first part:

forall x. IStore a b x -> f x

A function from IStore a b x is equivalent to a function of two arguments, one of them of type a and another of type b->x:

forall x. a -> (b -> x) -> f x

We can pull a out of forall to get:

a -> (forall x. (b -> x) -> f x)

If you squint a little, you recognize that the thing in parentheses is a natural transformation between the functor C(b, _) and f, where C is the category of Haskell types. We can now apply the Yoneda lemma, which says that this set of natural transformations is isomorphic to the set f b:

forall x. (b -> x) -> f x ≅ f b
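The two halves of this isomorphism are short enough to write out. A sketch, assuming RankNTypes, with toYo and fromYo as illustrative names:

```haskell
{-# LANGUAGE RankNTypes #-}

-- From f b to the natural transformation: map the given function over it.
toYo :: Functor f => f b -> (b -> x) -> f x
toYo fb g = fmap g fb

-- From the natural transformation back to f b: apply it to the identity.
fromYo :: (forall x. (b -> x) -> f x) -> f b
fromYo k = k id
```

The round trip fromYo (toYo fb) is fmap id fb, which equals fb by the functor laws. The other direction relies on naturality, which parametricity provides for free.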

We can apply the same transformation to the second part of our identity:

forall y. (IStore s t y -> f y) ≅ s -> f t

Taking it all together, we get:

forall f. Functor f => (a -> f b) -> (s -> f t)

≅ forall z. IStore s t z -> IStore a b z

Let’s now work on the right hand side:

forall z. IStore s t z -> IStore a b z

≅ forall z. s -> (t -> z) -> IStore a b z

Again, pulling s out of forall and applying the Yoneda lemma, we get:

s -> IStore a b t

But that’s just the standard representation of the lens:

s -> IStore a b t ≅ (s -> a, s -> b -> t) = (get, set)
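The isomorphism can be witnessed by a pair of conversion functions. This is a sketch assuming RankNTypes; toVL, fromVL, and pairStore are illustrative names, and the Functor instance for IStore a b is the ordinary one that maps over the last type parameter.

```haskell
{-# LANGUAGE RankNTypes #-}

data IStore a b t = IStore a (b -> t)

instance Functor (IStore a b) where
  fmap f (IStore x h) = IStore x (f . h)

type Lens s t a b = forall f. Functor f => (a -> f b) -> s -> f t

-- From the store representation to van Laarhoven:
-- apply h to the stored a, then lift the stored function with fmap.
toVL :: (s -> IStore a b t) -> Lens s t a b
toVL coalg h s = case coalg s of
  IStore a k -> fmap k (h a)

-- From van Laarhoven back to the store: instantiate f to IStore a b.
fromVL :: Lens s t a b -> s -> IStore a b t
fromVL l = l (\a -> IStore a id)

-- The pair lens in store form.
pairStore :: (a, c) -> IStore a b (b, c)
pairStore (x, y) = IStore x (\x' -> (x', y))
```

Applying fromVL (toVL pairStore) to (1, 'x') gives back a store holding 1 whose function sends "s" to ("s", 'x'), the same as pairStore (1, 'x').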

Thus the Yoneda embedding of the functor category leads to the van Laarhoven representation of the lens:

forall f. Functor f => (a -> f b) -> (s -> f t) ≅ (s -> a, s -> b -> t)

Playing with Adjunctions

This is all very satisfying, but you may wonder what’s so special about the IStore functor? The crucial step in the derivation of the van Laarhoven representation was the application of the Yoneda lemma to get this identity:

forall x. IStore a b x -> f x ≅ a -> f b

Let’s rewrite it in the more categorical language:

Nat(IStore a b, f) ≅ C(a, f b)

The set of natural transformations from the functor IStore a b to the functor f is isomorphic to the hom-set between a and f b. Any time you see an isomorphism of hom-sets (and remember that Nat is the hom-set in the functor category), you should be on the lookout for an adjunction. And indeed, we have an adjunction between two functors. One functor is defined as:

a -> IStore a b

It takes an object a in C and maps it to a functor IStore a b parameterized by some other object b. The other functor is:

f -> f b

It maps a functor, an object in the functor category, to an object in C. This functor is also parameterized by the same b. Since this is a flipped application, I’ll call it Flapp:

newtype Flapp b f = Flapp (f b)

So, for any b, the functor-valued functor IStore _ b is left adjoint to Flapp b. This is what makes IStore special.
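In Haskell, the two directions of this hom-set isomorphism can be sketched as follows, assuming RankNTypes. The names leftToRight and rightToLeft are mine, and f b stands for the contents of Flapp b f without the newtype wrapper.

```haskell
{-# LANGUAGE RankNTypes #-}

data IStore a b t = IStore a (b -> t)

-- Nat(IStore a b, f) -> C(a, f b):
-- feed the natural transformation a store whose function is the identity.
leftToRight :: (forall x. IStore a b x -> f x) -> a -> f b
leftToRight nat a = nat (IStore a id)

-- C(a, f b) -> Nat(IStore a b, f):
-- apply h to the stored value and lift the stored function.
rightToLeft :: Functor f => (a -> f b) -> IStore a b x -> f x
rightToLeft h (IStore a k) = fmap k (h a)
```

For h x = [x, x + 1], the round trip leftToRight (rightToLeft h) 3 evaluates to [3, 4], the same as h 3.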

As a side note: IStore a b is a covariant functor in a and a contravariant functor in b. However, Store a is not functorial in a, because a appears in both positive and negative position in its definition. So the adjunction trick doesn’t work for a simple (monomorphic) lens.

We can now turn the tables and use the adjunction to define the functor IStore in an arbitrary category (notice that the Yoneda lemma worked only for Set-valued functors). We just define a functor-valued functor IStore to be the left adjoint to Flapp, provided it exists.

Nat(IStore a b, f) ≅ C(a, f b)

Here, Nat is a set of natural transformations between endofunctors in C.

We can substitute the so defined functor into the Yoneda embedding formula we used earlier:

Nat(Nat(IStore a b, f), Nat(IStore s t, f))

≅ Nat(IStore s t, IStore a b)

We can now use the adjunction, rather than the Yoneda lemma, to eliminate some of the occurrences of IStore:

Nat(C(a, f b), C(s, f t))

≅ C(s, IStore a b t)

This is slightly more general than the original van Laarhoven equivalence.

We can go even farther and reproduce the Jaskelioff and O’Connor trick of constraining the generic functor in the definition of the van Laarhoven lens to a pointed or applicative functor. This results in a multi-focus lens. In particular, if we use pointed functors, we get lenses with zero or one targets, so-called affine lenses. Restricting the functors further to applicative leads to lenses with any number of targets, or traversals.

The trick is that any pointed or applicative functor can be stripped of the additional functionality and treated just like any other functor. This act of “forgetting” about pure and <*> may itself be considered a functor in the functor category. It’s called, appropriately, a forgetful functor. The left adjoint to a forgetful functor (if it exists) is called a free functor. It takes an arbitrary functor and creates a pointed functor by generating an artificial pure; or it creates an applicative functor by adding <*>. This adjunction is described by a natural isomorphism of hom-sets — in this case sets of natural transformations:

Nat(S, U f) ≅ Nat(S*, f)

Here, U is the forgetful functor, and S* is the free applicative/pointed version of the functor S. The functor f ranges across applicative (respectively, pointed) functors.

Now we can try to substitute the free version of IStore in the Yoneda embedding formula:

Nat(Nat(IStore* a b, f), Nat(IStore* s t, f))

≅ Nat(IStore* s t, IStore* a b)

The formula holds for any applicative (pointed) functor f and a set of natural transformations over such functors.

The first step is to use the forgetful/free adjunction:

Nat(Nat(IStore a b, U f), Nat(IStore s t, U f))

≅ Nat(IStore s t, U IStore* a b)

Then we can use our defining adjunction for IStore to get:

Nat(C(a, U f b), C(s, U f t))

≅ C(s, U IStore* a b t)

In Haskell notation this reads:

forall f. Applicative f => (a -> f b) -> (s -> f t)

≅ s -> IAppStore a b t

(the action of U is implicit).

The free applicative version of IStore is defined as:

data IAppStore a b t =
    Unit t
  | IAppStore a (IAppStore a b (b -> t))
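To get a feel for this data structure, here is a sketch of how a value of IAppStore a b t can be interpreted in any applicative functor, which is one direction of the isomorphism above. The names runApp and both are illustrative; both lists the two components of a homogeneous pair as targets.

```haskell
import Data.Functor.Const (Const (..))

data IAppStore a b t
  = Unit t
  | IAppStore a (IAppStore a b (b -> t))

-- Interpret the structure in an applicative functor: run h on each
-- stored a, left to right, and feed the results to the final function.
runApp :: Applicative f => (a -> f b) -> IAppStore a b t -> f t
runApp _ (Unit t) = pure t
runApp h (IAppStore a rest) = (\b k -> k b) <$> h a <*> runApp h rest

-- Both components of a pair as targets.
-- The innermost function receives the replacement for y first, then for x.
both :: (a, a) -> IAppStore a b (b, b)
both (x, y) = IAppStore x (IAppStore y (Unit (\y' x' -> (x', y'))))

-- Collect all targets using the Const applicative over lists.
targets :: IAppStore a b t -> [a]
targets = getConst . runApp (\a -> Const [a])
```

For example, runApp (\x -> Just (x + 1)) (both (1, 2)) evaluates to Just (2, 3), and targets (both (1, 2)) evaluates to [1, 2].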

These are all known results, but the use of the Yoneda embedding and the adjunction to define the IStore functor makes the derivation more compact and slightly more general.

Acknowledgments

I’m grateful to Mauro Jaskelioff, Gershom Bazerman, and Joseph Abrahamson for reading the draft and providing helpful comments and to André van Meulebrouck for editing help.

Bibliography

- Edward Kmett, The Haskell Lens Library

- Simon Peyton Jones, Lenses: Compositional Data Access and Manipulation. A Skills Matter video presentation.

- Joseph Abrahamson, A Little Lens Starter Tutorial

- Joseph Abrahamson, Lenses from Scratch

- Artyom, lens over tea tutorial

- Twan van Laarhoven, CPS based functional references

- Bartosz Milewski, Lenses, Stores, and Yoneda

- Mauro Jaskelioff, Russell O’Connor, A Representation Theorem for Second-Order Functionals

- Bartosz Milewski, Understanding Yoneda

July 17, 2015 at 2:44 pm

This is great. Can you enlighten how you get from functors into Set in Yoneda to any functor? (forall f. Functor f => …) Is there some known result about what’s good for Set, is good for any CCC?

July 18, 2015 at 11:45 am

There is no general result like this. Some theorems explicitly mention Set — that would be the Yoneda lemma. But other things, like adjunctions, are valid for any category (well, locally small). Yoneda embedding deals with isomorphisms of hom-sets (or sets of natural transformations), which are sets. Even those can be generalized, though, if you decide to work with enriched categories.

Haskell itself is a weird client of category theory, because at its core it is based on typed lambda calculus, which is a model of CCC; but then it has to deal with non-strictness, which takes it outside of it. So if one can prove theorems that are not tied to Set then they will be valid outside of the strict subset of Haskell. At least that’s the general idea.

July 22, 2015 at 4:07 pm

You state this so confidently, even though anybody can define additional two-valued type in one second.

July 23, 2015 at 11:48 am

Valentin: And guess what: They are all equivalent to Bool.

March 15, 2018 at 3:08 am

I think that this work is related

https://github.com/hablapps/stateless

“Optics provide abstractions and patterns to access and update immutable data structures. They compose, both homogeneously and heterogeneously, so they become essential to express complex data transformations in a modular and elegant way. However, optics are restricted to work solely with in-memory data structures.” “Stateless is a type class based framework that provides the means to take optic awesomeness to new settings, such as databases or microservices. To do so, it exploits optic algebras, an abstraction that generalizes monomorphic optics and enables programmers to describe the data layer and business logic of their applications in abstract terms, while keeping them completely decoupled from particular infrastructures.”

https://blog.hablapps.com/2016/11/10/lens-state-is-your-father/

So I quoted your article here:

https://github.com/hablapps/LensStateIsYourFather/issues/3

because I suspect that the verification they did in Coq (see https://github.com/hablapps/LensStateIsYourFather#2-proof-of-paternity), to show that State MonadState is the parent of Lens, is basically what you wrote here: “Substitute Store a for f and you see that a lens is nothing but a coalgebra for this functor. etc…”
